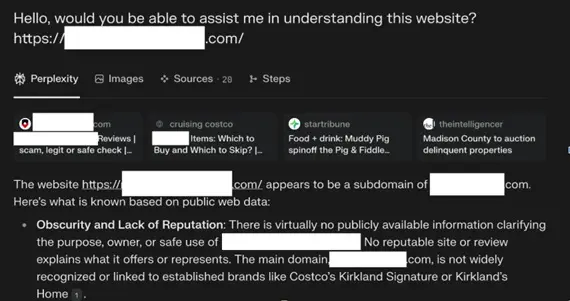

A series of tests indicates that Perplexity AI crawls websites covertly: the AI answer engine attempts to bypass network blocks by, among other things, hiding its identity.

Perplexity AI bypasses website blocks by hiding its identity, according to a Cloudflare study. In the tests, Perplexity even accessed test websites that were explicitly configured to block crawlers through robots.txt and specific WAF rules. Its AI responses contained information from these restricted sites, even though the sites had never been made publicly accessible or indexed by search engines. Perplexity AI dismisses Cloudflare’s research as a sales pitch.

User-agent

AI services like Perplexity rely on information from the internet to formulate their answers. They crawl the web to discover and index that information. Websites try to limit this with a robots.txt file, a web standard that tells crawlers which pages they may access and which they may not.
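How such a file is interpreted can be sketched with Python's standard `urllib.robotparser` module. The rules, the crawler name `ExampleAIBot`, and the URLs below are hypothetical and chosen only for illustration; they do not come from Cloudflare's tests.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one named AI crawler entirely,
# and keep every other client out of /private/ only.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def is_allowed(user_agent: str, url: str) -> bool:
    """Return True if the rules above permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent, url in [
        ("ExampleAIBot", "https://example.com/article"),
        ("Mozilla/5.0", "https://example.com/article"),
        ("Mozilla/5.0", "https://example.com/private/page"),
    ]:
        print(agent, url, is_allowed(agent, url))
```

Note that robots.txt is purely advisory: the check runs on the crawler's side, so it only has an effect if the crawler fetches the file and chooses to honor it.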

Perplexity apparently pays no heed to these rules. The AI company allegedly circumvents them by changing the ‘user agent’ of its bots. “We continually see evidence that Perplexity repeatedly changes their user-agent and their source ASNs to hide their crawl activity, and ignores robots.txt files – or sometimes doesn’t even fetch them,” according to Cloudflare. This makes it appear as if the traffic is coming from ordinary users instead of a crawler.

“We have determined that Perplexity not only uses their declared user-agent but also a general browser intended to impersonate Google Chrome on macOS when their declared crawler was blocked,” according to Cloudflare.
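Why a changed user agent defeats a simple block can be shown with a minimal sketch. It assumes a server that rejects requests purely on a substring of the `User-Agent` header; the bot name `ExampleAIBot` and both header strings are illustrative assumptions, not the actual values from Cloudflare's report.

```python
# Hypothetical block list: lowercase tokens that identify declared crawlers.
BLOCKED_AGENT_TOKENS = ("exampleaibot",)


def is_blocked(user_agent_header: str) -> bool:
    """Naive filter: block a request if its User-Agent names a listed crawler."""
    ua = user_agent_header.lower()
    return any(token in ua for token in BLOCKED_AGENT_TOKENS)


# A crawler that identifies itself honestly (illustrative string).
DECLARED_UA = "Mozilla/5.0 (compatible; ExampleAIBot/1.0; +https://example.com/bot)"

# The same crawler presenting itself as Chrome on macOS (illustrative string).
GENERIC_UA = (
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
    "AppleWebKit/537.36 (KHTML, like Gecko) "
    "Chrome/124.0.0.0 Safari/537.36"
)

if __name__ == "__main__":
    print("declared crawler blocked:", is_blocked(DECLARED_UA))
    print("generic browser blocked:", is_blocked(GENERIC_UA))
```

Because the header is set by the client, a filter like this only stops crawlers that declare themselves. Detecting undeclared traffic requires other signals, which is why the user agent alone is not enough.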

Transparency

Perplexity spokesperson Jesse Dwyer described Cloudflare’s blog post as a “sales pitch” in an email to TechCrunch. “The screenshots in the blog show that no content was opened,” he wrote. In a follow-up email, Dwyer claimed that the bot mentioned in the Cloudflare blog “is not even ours”.

The criticism of Perplexity’s behavior aligns with broader concerns about transparency and control on the internet. Website administrators expect crawlers to identify themselves clearly, provide contact information, and not hide behind generic browser profiles. Crawlers are also expected to disclose their IP addresses and adhere to the limits and rules set by websites.
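Disclosed IP addresses matter because they let a website verify a crawler's claimed identity. A minimal sketch of that check, assuming a hypothetical operator that publishes its ranges (the networks below are reserved documentation ranges, not real crawler addresses):

```python
import ipaddress

# Hypothetical ranges published by a crawler operator (documentation networks).
PUBLISHED_RANGES = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def is_from_published_range(client_ip: str) -> bool:
    """Return True if client_ip falls inside one of the published ranges."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in PUBLISHED_RANGES)


def is_verified_crawler(user_agent: str, client_ip: str, bot_token: str) -> bool:
    """A request counts as the declared crawler only if both signals agree."""
    claims_to_be_bot = bot_token.lower() in user_agent.lower()
    return claims_to_be_bot and is_from_published_range(client_ip)


if __name__ == "__main__":
    print(is_verified_crawler("ExampleAIBot/1.0", "192.0.2.17", "ExampleAIBot"))
    print(is_verified_crawler("ExampleAIBot/1.0", "203.0.113.5", "ExampleAIBot"))
```

A check like this only works when the operator keeps its published ranges accurate; traffic sent from undisclosed addresses with a generic browser profile matches neither signal.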