Nvidia launches the Rubin platform. The Rubin chip succeeds Blackwell and, together with the Vera CPU, will power new AI systems from the second half of 2026.

At CES, Nvidia launches the Rubin chip as planned. Rubin succeeds Blackwell and, according to Nvidia, should deliver five times its predecessor's inference performance. The chip contains 336 billion transistors and delivers 50 petaflops of NVFP4 compute for inference. For training AI models the figure is lower, at 35 petaflops, which is about 2.5 times more than Blackwell.

Platform approach

The new chip is part of what Nvidia calls the Rubin platform, which also includes the new Arm-based Vera CPU. Vera has 88 Olympus cores, which Nvidia developed in-house and which are compatible with Armv9.2. The CPU uses NVLink-C2C as its interconnect. Both chips are named after Vera Florence Cooper Rubin, an American astronomer.

Nvidia combines the Rubin accelerator and the Vera CPU into Vera Rubin, a tandem that succeeds Grace Hopper. Vera Rubin NVL72 is a complete server rack in which the hardware is combined into an AI-HPC system. The components in the rack communicate with each other over the sixth generation of NVLink. The ConnectX-9 SuperNIC, a network accelerator, is also part of the design, along with the BlueField-4 DPU.

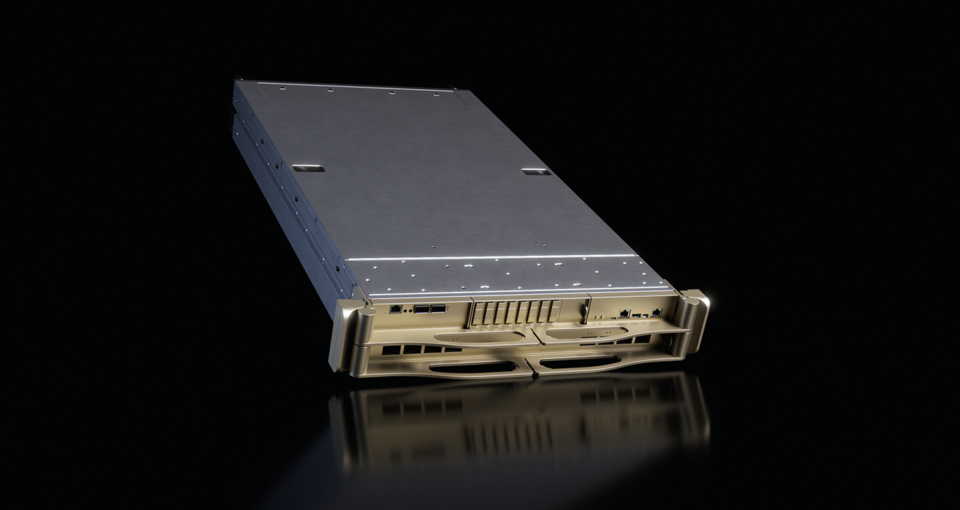

Nvidia also packages Rubin in a smaller system, DGX Rubin NVL8. This is a more conventional server with eight Nvidia Rubin GPUs on board, paired with dual-socket Intel Xeon 6776P CPUs. The x86-based

SuperPOD

Finally, Nvidia provides the architecture to roll out Vera Rubin NVL72 or DGX Rubin NVL8 on a large scale and combine them into clusters. This is done via Nvidia DGX SuperPODs. A single Nvidia DGX SuperPOD can include eight Nvidia DGX Vera Rubin NVL72 racks, or 64 DGX Rubin NVL8 servers.
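To put those SuperPOD sizes in perspective, the GPU counts and theoretical peak throughput can be worked out with some simple arithmetic. The sketch below is illustrative only. It assumes that the "72" and "8" in the product names are the number of Rubin GPUs per rack or server, and that peak performance scales linearly with GPU count, which real workloads will not achieve. The class and constant names are hypothetical.

```python
from dataclasses import dataclass

# Per-GPU peak figures quoted in this article (NVFP4, in petaflops).
INFERENCE_PFLOPS_PER_GPU = 50
TRAINING_PFLOPS_PER_GPU = 35


@dataclass(frozen=True)
class SuperPodConfig:
    name: str
    units: int          # racks or servers per SuperPOD, as stated in the article
    gpus_per_unit: int  # assumption: read off the "NVL72" / "NVL8" suffix

    @property
    def gpus(self) -> int:
        return self.units * self.gpus_per_unit

    def peak_exaflops(self, pflops_per_gpu: int) -> float:
        # Naive linear scaling; 1 exaflop = 1000 petaflops.
        return self.gpus * pflops_per_gpu / 1000


CONFIGS = [
    SuperPodConfig("Vera Rubin NVL72", units=8, gpus_per_unit=72),
    SuperPodConfig("DGX Rubin NVL8", units=64, gpus_per_unit=8),
]

for cfg in CONFIGS:
    print(
        f"{cfg.name}: {cfg.gpus} GPUs, "
        f"{cfg.peak_exaflops(INFERENCE_PFLOPS_PER_GPU):.1f} EF inference, "
        f"{cfg.peak_exaflops(TRAINING_PFLOPS_PER_GPU):.2f} EF training"
    )
```

Under these assumptions, a SuperPOD of eight NVL72 racks holds 576 GPUs and one of 64 NVL8 servers holds 512, for a theoretical NVFP4 inference peak of 28.8 and 25.6 exaflops respectively.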

With Rubin, Nvidia is once again targeting the world's largest AI players. OpenAI, Microsoft, AWS and Google, among others, are waiting for the powerful hardware, as are Meta and Oracle. Manufacturers including HPE, Dell and Lenovo also intend to adopt Rubin.

Nvidia claims higher efficiency with the new components: the price per inference token is said to fall by a factor of ten compared to the previous generation. At the same time, Rubin enables a higher density of systems. Water-cooled clusters with a power draw of 200 kilowatts per rack are coming into view with this hardware. The hunger for more AI computing power and, consequently, for more electrical power will not subside with the introduction of Rubin.

The Rubin chips are reportedly rolling off the production line in volume at the moment. The first systems with Rubin on board should become available through partners in the second half of 2026. All major cloud providers plan to offer Vera Rubin instances later this year.