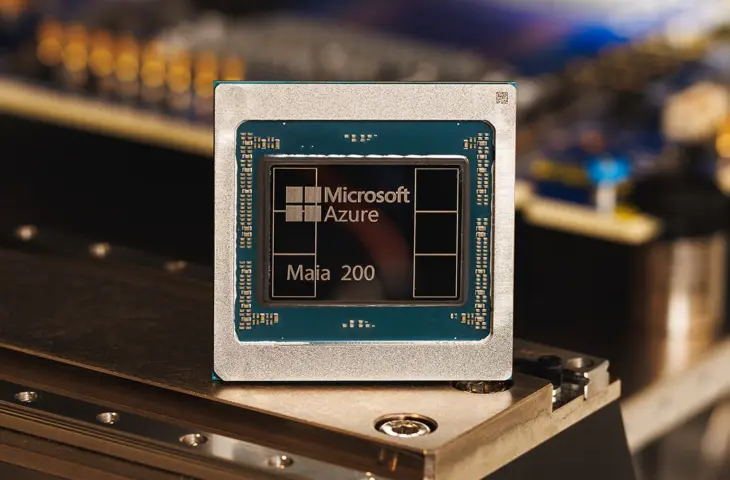

Microsoft launches the Maia 200: a new AI inference chip designed to make large-scale AI applications more efficient and cost-effective within the Azure infrastructure.

Microsoft has unveiled the Maia 200, an AI accelerator that it positions as an alternative to Nvidia’s Blackwell generation and to the AI offerings of other cloud providers. The Maia 200 is focused on inference and is intended to run AI models such as GPT-5.2. The chip succeeds the first Maia from 2023.

Specifications and RAM shortages

The Maia 200 is manufactured by TSMC on a 3-nanometer process. The chip features FP8/FP4 tensor cores and delivers 10 petaFLOPS of FP4 computing power at a TDP of 750 watts. On paper, that puts the Maia 200 on par with the Nvidia B200 for inference. According to Microsoft, the Maia 200 offers three times the FP4 performance of Amazon’s Trainium 3 and surpasses the FP8 performance of Google’s TPU v7. There is currently no comparison with Nvidia’s Rubin architecture.
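The two headline figures can be combined into a rough efficiency number. The sketch below uses only the values quoted above (10 petaFLOPS of FP4, 750 W TDP); the function name is illustrative, and dividing peak throughput by TDP gives a theoretical ceiling, not a measured result.

```python
def peak_tflops_per_watt(peak_petaflops: float, tdp_watts: float) -> float:
    """Theoretical peak throughput per watt, in teraFLOPS per watt."""
    # 1 petaFLOPS = 1000 teraFLOPS
    return peak_petaflops * 1000.0 / tdp_watts


# Figures from the article: 10 petaFLOPS FP4 at a 750 W TDP.
maia_200_fp4 = peak_tflops_per_watt(peak_petaflops=10.0, tdp_watts=750.0)
print(f"{maia_200_fp4:.1f} TFLOPS/W")  # 13.3 TFLOPS/W
```

Real workloads rarely sustain peak FLOPS, so this is best read as an upper bound for comparing spec sheets.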

The chip also gets 216 GB of HBM3e memory with a bandwidth of 7 TB/s, plus 272 MB of on-chip SRAM. This allows the Maia 200 to hold large LLMs in memory. It also makes the Maia 200 a good example of an AI processor that consumes a large amount of HBM, and it illustrates Microsoft’s role in the current DRAM shortage and the associated high prices.
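To give a sense of what 216 GB and 7 TB/s mean for LLMs, here is a back-of-the-envelope sketch. It assumes decimal gigabytes, 1 byte per parameter for FP8 and 0.5 byte for FP4, and a simplified decoding model in which every weight is read from HBM once per generated token. It ignores the KV cache, activations, and quantization scale factors, so real capacity and throughput will be lower.

```python
HBM_BYTES = 216e9        # 216 GB of HBM3e (decimal gigabytes assumed)
HBM_BANDWIDTH = 7e12     # 7 TB/s

# Storage cost per parameter for the two supported low-precision formats.
BYTES_PER_PARAM = {"FP8": 1.0, "FP4": 0.5}


def max_params(hbm_bytes: float, bytes_per_param: float) -> float:
    """Largest parameter count whose weights alone fit in HBM."""
    return hbm_bytes / bytes_per_param


def decode_tokens_per_second_bound(model_bytes: float, bandwidth: float) -> float:
    """Bandwidth-bound ceiling for single-stream decoding:
    each token requires streaming all weights from memory once."""
    return bandwidth / model_bytes


for fmt, size in BYTES_PER_PARAM.items():
    params = max_params(HBM_BYTES, size)
    print(f"{fmt}: up to {params / 1e9:.0f}B parameters")

# A model whose weights fill the entire HBM.
bound = decode_tokens_per_second_bound(HBM_BYTES, HBM_BANDWIDTH)
print(f"Bandwidth-bound decode ceiling: {bound:.1f} tokens/s per stream")
```

Under these assumptions the weights of a model with roughly 432 billion FP4 parameters would fit on a single chip, which is why the memory size matters as much as the FLOPS figure for inference.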

Proprietary server architecture

Microsoft has also developed the server architecture itself. Each server tray contains four chips that are fully connected through direct links. Microsoft uses standard Ethernet and its own transport protocol for fast and reliable communication between the chips. Redmond thus remains independent of third-party interconnects, such as those from Nvidia.
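"Fully connected" means every chip in a tray has a direct link to every other chip. The sketch below enumerates those links for a four-chip tray; the chip names are placeholders and nothing here reflects Microsoft's actual wiring or protocol.

```python
from itertools import combinations


def full_mesh_links(chips: list[str]) -> list[tuple[str, str]]:
    """All unordered chip pairs, i.e. one direct link per pair."""
    return list(combinations(chips, 2))


tray = ["chip0", "chip1", "chip2", "chip3"]  # hypothetical labels
links = full_mesh_links(tray)

print(len(links))  # 6
for a, b in links:
    print(f"{a} <-> {b}")
```

With n chips a full mesh needs n(n-1)/2 links, so four chips need six links and each chip talks to three peers without an intermediate hop.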

The chip is intended for large-scale AI workloads and will initially be used in the Azure data centers in Iowa and later in Arizona.

Efficiency in LLM inference

Maia 200 should increase efficiency in running large language models, including OpenAI’s GPT-5.2. Internal teams such as Microsoft Superintelligence also use the chip for synthetic data generation and reinforcement learning, among other things. Thanks to the redesigned memory structure and optimized data throughput, Maia 200 is tailored for low-precision inference, which should contribute to cost efficiency.
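To illustrate what low-precision inference involves, the sketch below rounds a small block of weights to a 4-bit floating-point grid with a shared scale. It assumes the E2M1 layout (magnitudes 0, 0.5, 1, 1.5, 2, 3, 4, 6), which is a common FP4 format; the article does not say which FP4 variant or scaling scheme the Maia 200 uses, and the function name is hypothetical.

```python
# Non-negative magnitudes representable in the E2M1 FP4 format (assumed here).
E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)


def quantize_block_fp4(values: list[float]) -> tuple[float, list[float]]:
    """Round a non-empty block of floats to scale * (E2M1 value).

    Returns the shared scale and the dequantized approximations.
    """
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return 1.0, [0.0 for _ in values]
    # Map the largest magnitude in the block onto the largest FP4 value.
    scale = max_abs / E2M1_MAGNITUDES[-1]
    quantized = []
    for v in values:
        target = abs(v) / scale
        nearest = min(E2M1_MAGNITUDES, key=lambda m: abs(m - target))
        quantized.append((nearest if v >= 0 else -nearest) * scale)
    return scale, quantized


weights = [0.12, -0.6, 0.31, 0.05]
scale, approx = quantize_block_fp4(weights)
for original, rounded in zip(weights, approx):
    print(f"{original:+.2f} -> {rounded:+.2f}")
```

Each weight then needs only 4 bits plus a share of the block scale, which cuts memory traffic at the cost of rounding error. Production formats typically constrain the scale (for example to a power of two); the free floating-point scale here is a simplification.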

Maia SDK

For developers, Microsoft offers a Maia SDK with support for PyTorch, a Triton compiler, and low-level programming via NPL, among other things. This should help optimize AI models for the Maia 200 hardware. The SDK is currently available as a preview.

Thanks to a pre-silicon test environment, Microsoft was able to deploy the Maia 200 faster than previous generations. Microsoft is already working on future generations of Maia.