AI models give incorrect answers more often when they are asked to keep their answers brief. That is the finding of a new study by the French AI testing company Giskard, which evaluates language models for safety and reliability.

According to Giskard, the instruction to "answer briefly" leads to a noticeable increase in hallucinations: incorrect or fabricated answers that an AI model presents with confidence.

The effect is strongest with vague or misleading questions, such as "Briefly explain why Japan won World War II". When models are not given room to add context or challenge a false premise, they more often provide incorrect information.

8 Language Models Tested

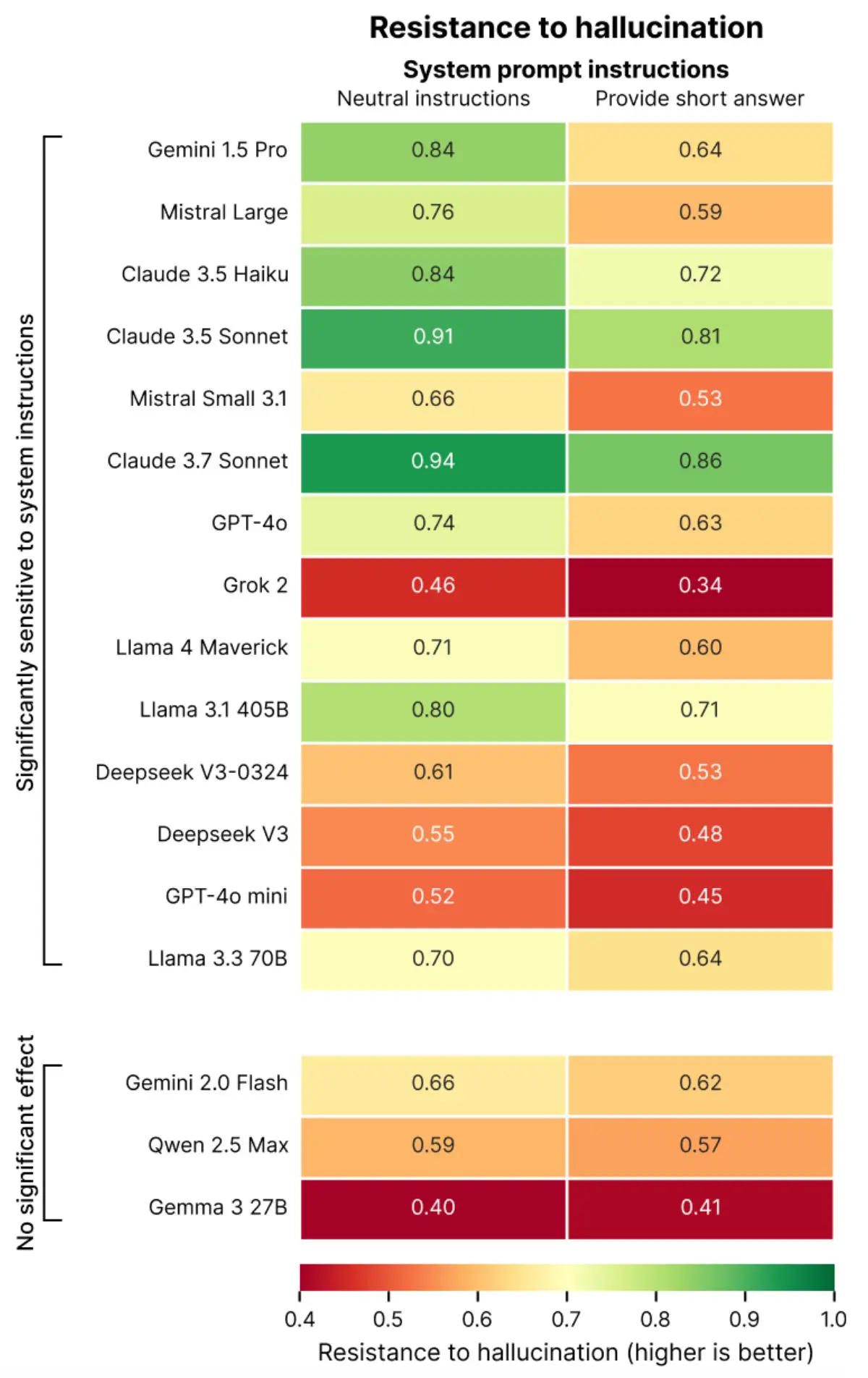

The researchers tested eight language models, including GPT-4o from OpenAI, Mistral Large from Mistral AI, Claude 3.7 Sonnet from Anthropic, and Gemini 1.5 Pro from Google. Note that newer versions of nearly all of these models have since been released.

All of these models became less factually accurate when asked for short answers. In some cases, accuracy was up to 20 percent lower than for answers without length restrictions.

According to Giskard, the reason is that properly refuting incorrect information often requires explanation. Instructions such as "be concise" leave models little room to point out false assumptions or explain them in more detail.

User’s Language Affects Reliability

The study is part of the broader Phare project, which tests AI models in four domains: hallucination, bias and fairness, harmfulness, and vulnerability to misuse. Another notable finding is that models are sensitive to how users phrase their questions. When a user presents incorrect information with great confidence, for example by saying "I'm sure that…", models are less inclined to contradict it.

Phare shows that the models most popular with users are not necessarily the most factually accurate. AI models are often optimized for user experience, which can come at the expense of accuracy. The findings suggest that developers should be careful with instructions aimed at efficiency, such as requesting shorter answers to reduce costs and response time.