LambdaTest introduces Agent-to-Agent Testing: a new platform to test AI agents for reliability, performance, and consistency.

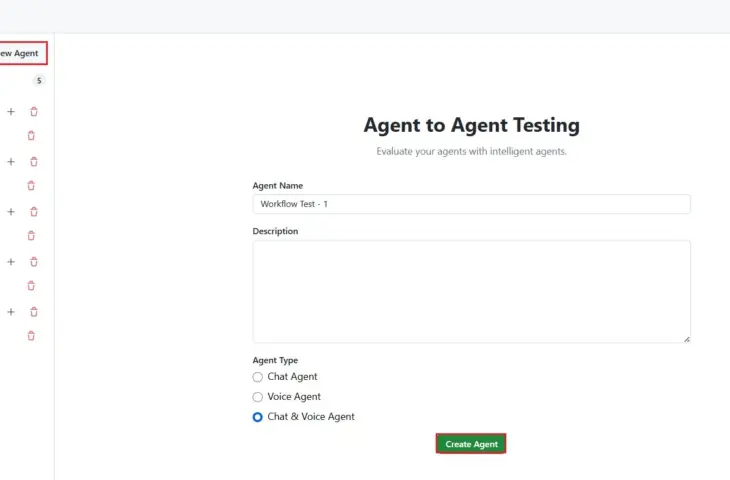

LambdaTest has launched a private beta of Agent-to-Agent Testing. The platform was developed specifically to evaluate AI agents through automated tests. According to the company, this is the first solution that deploys multiple AI agents to test other AI systems.

Agents for Agents

In essence, LambdaTest provides various specialized LLMs that together should be able to automatically evaluate agents (tools based on other LLMs). The combination of different models aims to increase reliability and prevent hallucinations.
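To make the idea of combining several evaluators concrete, here is a minimal sketch of a majority vote over independent judges. It is not LambdaTest's implementation: the judge functions are simple rule-based stand-ins for what would be separate LLMs, and every name in it (`Judge`, `non_empty_judge`, `on_topic_judge`, `polite_judge`, `majority_verdict`) is hypothetical.

```python
from collections import Counter
from typing import Callable, List

# A judge takes (user_prompt, agent_reply) and returns "pass" or "fail".
# Assumption: in a real system each judge would wrap a different LLM;
# the rule-based functions below are stand-ins for illustration only.
Judge = Callable[[str, str], str]


def _words(text: str) -> List[str]:
    """Lowercase the text and strip basic punctuation from each word."""
    return [w.lower().strip(".,?!") for w in text.split()]


def non_empty_judge(prompt: str, reply: str) -> str:
    # Fails replies that contain nothing but whitespace.
    return "pass" if reply.strip() else "fail"


def on_topic_judge(prompt: str, reply: str) -> str:
    # Passes if the reply shares at least one longer word with the prompt.
    prompt_words = {w for w in _words(prompt) if len(w) > 3}
    return "pass" if prompt_words & set(_words(reply)) else "fail"


def polite_judge(prompt: str, reply: str) -> str:
    # Fails replies that contain a word from a small blocklist.
    rude = {"stupid", "idiot"}
    return "fail" if rude & set(_words(reply)) else "pass"


def majority_verdict(judges: List[Judge], prompt: str, reply: str) -> str:
    """Return "pass" only if more judges vote pass than fail."""
    votes = Counter(judge(prompt, reply) for judge in judges)
    return "pass" if votes["pass"] > votes["fail"] else "fail"


if __name__ == "__main__":
    judges = [non_empty_judge, on_topic_judge, polite_judge]
    print(majority_verdict(judges, "Where is my order?", "Your order ships tomorrow."))
```

With an odd number of judges, one judge that gets a case wrong is outvoted by the other two, which is the intuition behind using several models rather than one.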

The technology addresses challenges such as conversational logic, intent recognition, consistency in tone, and complex reasoning. LambdaTest tests AI agents with a system that is itself built on multiple agents and LLMs. This approach should produce more in-depth and realistic test scenarios.

The system includes fifteen specialized AI test agents that check for safety and compliance, among other things. This approach should help bring AI applications into production faster and more safely. Automation also reduces the need for manual quality control, which lowers testing costs.

Flexible Requirements

Users can upload test requirements in various formats such as text, images, audio, or video. The platform automatically analyzes this input and generates test scenarios based on real situations. Each scenario contains metrics with expected outcomes, which are evaluated via HyperExecute, LambdaTest’s own test cloud.
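As a rough illustration of a scenario that carries metrics with expected outcomes, the sketch below models one in Python and checks observed scores against it. The article does not describe the platform's actual scenario format, so the `Metric` and `Scenario` classes, the `evaluate` function, the 0.0 to 1.0 score scale, and the metric names are all assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Metric:
    name: str
    expected_min: float  # assumed scale: lowest acceptable score, 0.0 to 1.0


@dataclass
class Scenario:
    description: str
    metrics: List[Metric] = field(default_factory=list)


def evaluate(scenario: Scenario, observed: Dict[str, float]) -> Dict[str, bool]:
    """Compare observed scores with each metric's expected minimum.

    A metric that has no observed score is treated as 0.0, so it fails
    any metric whose expected minimum is above zero.
    """
    return {
        m.name: observed.get(m.name, 0.0) >= m.expected_min
        for m in scenario.metrics
    }


if __name__ == "__main__":
    scenario = Scenario(
        description="Customer asks for a refund on a delayed order",
        metrics=[
            Metric("completeness", 0.8),
            Metric("hallucination_free", 0.9),
        ],
    )
    # Hypothetical scores, as a test run might report them.
    print(evaluate(scenario, {"completeness": 0.85, "hallucination_free": 0.7}))
```

Keeping the expected outcome next to each metric means a run can be judged per metric, rather than as a single overall pass or fail.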

Agent-to-Agent Testing also highlights quality criteria such as bias, completeness, and hallucinations. According to LambdaTest, the platform ensures faster test execution and broader test coverage. The private beta is currently available to interested organizations.