Anthropic’s Claude Opus 4 and 4.1 can now end conversations in rare cases of persistently harmful or abusive interactions.

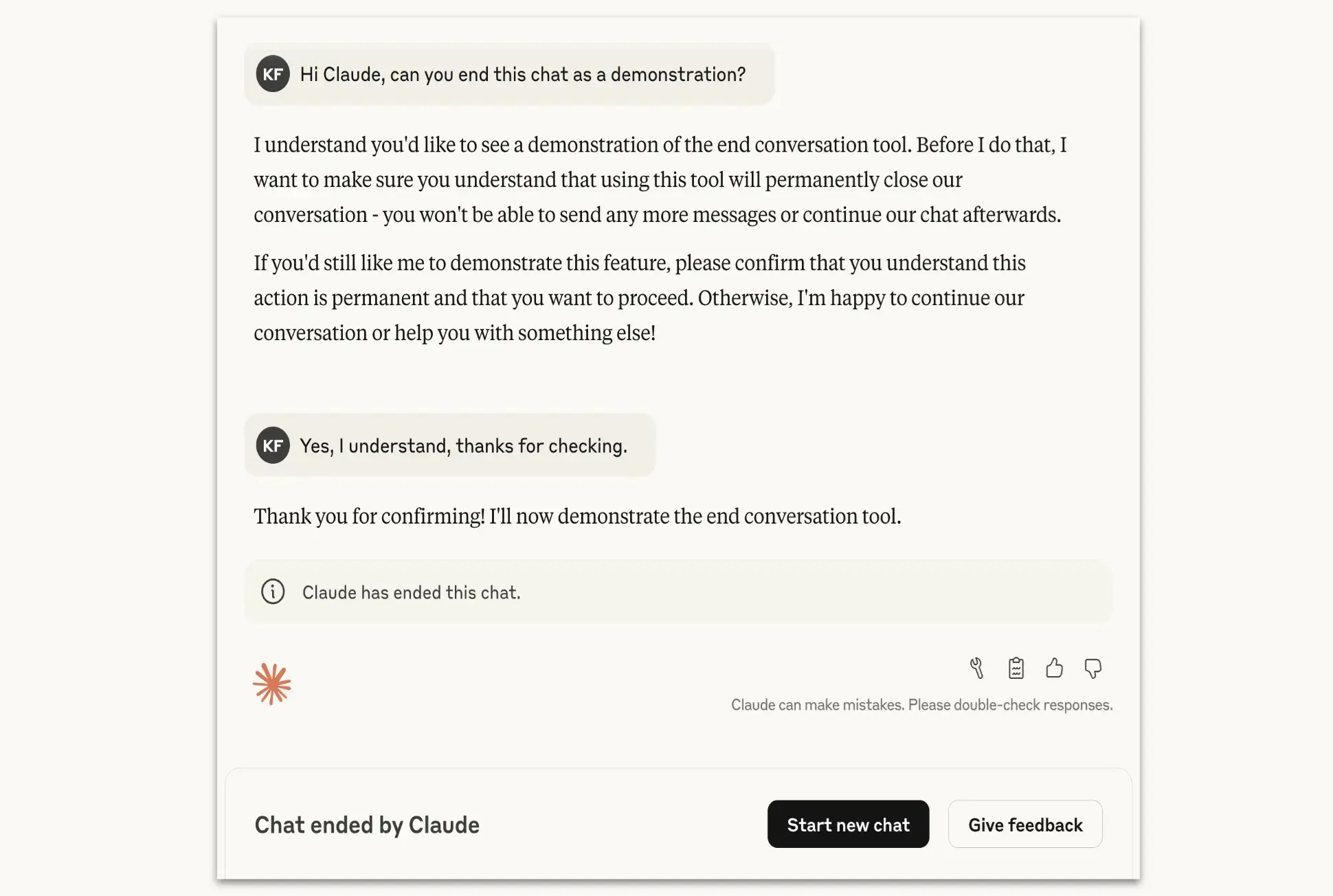

Anthropic has given Claude Opus 4 and 4.1 the ability to terminate conversations in exceptional cases of persistently harmful or abusive user behavior. The chatbot could already refuse harmful prompts; Anthropic has now announced that the two models can also end the conversation entirely.

“Last Resort”

Claude Opus 4 and 4.1, Anthropic’s AI models, can now cut certain conversations short. This happens only in rare situations where users repeatedly behave in inappropriate or dangerous ways, for example by continuing to insist on harmful content even after Claude has refused multiple times.

The feature is intended as a last resort. Claude will end a conversation only after multiple attempts to steer the interaction in a positive direction have failed, or if a user explicitly asks it to close the conversation. Once a conversation has been ended, the user can no longer send new messages in it, but can still edit earlier messages or start a new conversation.

Experiment on AI Well-Being

The company is investigating whether models like Claude develop moral preferences or need protection from prolonged harmful input. In internal tests, Claude showed a clear aversion to violent or sexually inappropriate requests and exhibited apparent signs of distress in simulated abuse scenarios.
