Nvidia introduces Spectrum-XGS Ethernet to make multiple, distributed data centers work together as a single AI environment.

Nvidia wants to connect distributed data centers into enormous clusters. To that end, it is announcing Spectrum-XGS Ethernet at the Hot Chips conference in the US.

Across Locations

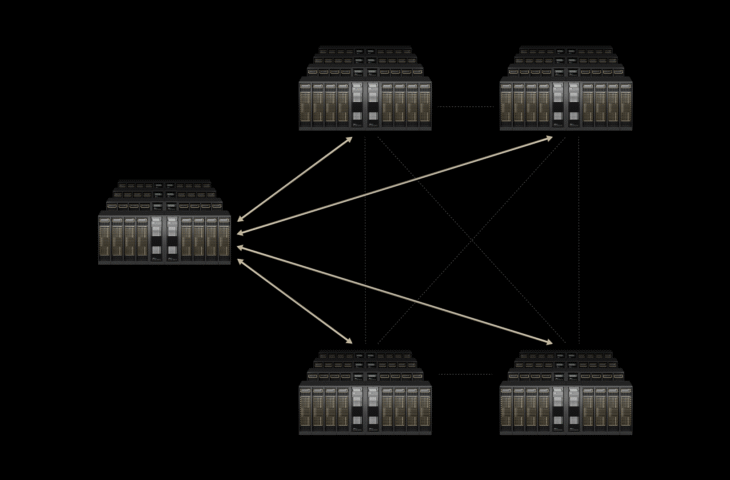

Spectrum-XGS adds a scale-across layer to the existing Spectrum-X architecture, so that data centers at different locations function as a single cluster. Nvidia cites distance-dependent congestion control, precise latency management, and end-to-end telemetry as its core functions. The technology is meant to accelerate collective GPU communication and keep performance predictable over longer distances.
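A rough calculation shows why congestion control has to take distance into account. The sketch below is not Nvidia's algorithm. It only estimates the propagation delay and the amount of data in flight on a link, assuming a signal speed in optical fiber of about 200,000 km/s and a hypothetical 400 Gb/s link. The function names and the example distances are illustrative.

```python
# Illustrative only: not Nvidia's congestion-control algorithm.
# Assumption: signal speed in optical fiber is about 200,000 km/s (~5 us per km).
FIBER_KM_PER_S = 200_000.0


def round_trip_time_s(distance_km: float) -> float:
    """Propagation-only round-trip time over a fiber of the given length."""
    return 2.0 * distance_km / FIBER_KM_PER_S


def bandwidth_delay_product_bytes(distance_km: float, link_gbps: float) -> float:
    """Bytes that must be in flight to keep the link full for one round trip."""
    bits_per_s = link_gbps * 1e9
    return bits_per_s * round_trip_time_s(distance_km) / 8.0


if __name__ == "__main__":
    # Hypothetical distances: inside one hall, across a campus, between cities.
    for km in (0.1, 10.0, 1000.0):
        rtt_us = round_trip_time_s(km) * 1e6
        bdp_mb = bandwidth_delay_product_bytes(km, link_gbps=400.0) / 1e6
        print(f"{km:>7.1f} km  RTT {rtt_us:>9.1f} us  in-flight {bdp_mb:>8.2f} MB")
```

Under these assumptions, a 100 m link holds about 50 kB in flight per round trip, while a 1,000 km link holds about 500 MB. Feedback about congestion therefore arrives much later relative to the data already sent, which is the kind of effect a distance-aware scheme has to compensate for.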

Spectrum-XGS is part of the broader Spectrum-X platform, which also includes Spectrum-X switches and ConnectX-8 SuperNICs. The platform aims for higher bandwidth density and lower latency than standard Ethernet in multi-tenant AI environments. According to Nvidia, the improvements nearly double the performance of the Nvidia Collective Communications Library (NCCL) for communication across multiple nodes and locations. Spectrum-XGS is available now as part of the Spectrum-X portfolio.

Evolution of Spectrum

Spectrum-XGS Ethernet builds on Spectrum-X, the Ethernet platform tailored for AI traffic that Nvidia introduced in 2023. The photonic innovations from Spectrum-X Photonics and Quantum-X Photonics lay the foundation within a single data center location. Co-packaged optics in the switches deliver high port speeds and better efficiency, which improves bandwidth and signal integrity for AI clusters with many GPUs.

Spectrum-XGS extends this foundation with scale-across functionality that spans multiple locations. The intended result is a single AI cluster spread across several sites. Nvidia reports that customers are already lined up to connect data centers using this technology.