NetApp introduces a data platform specifically developed for AI applications at scale. The platform consists of the new AFX storage architecture and an AI Data Engine, and integrates closely with Nvidia.

NetApp unveils a new enterprise-level AI data platform. The data specialist focuses on exabyte-scale systems and embraces Nvidia as a crucial technology partner. Specifically, NetApp connects its own scalable storage systems with Nvidia’s DGX AI systems.

NetApp AFX

The new AFX systems are at the core of the platform. These flash storage systems decouple capacity from performance, so the two can be scaled independently. They run on a customized version of NetApp Ontap and are designed for AI workloads. The storage servers are certified for use with Nvidia DGX SuperPOD clusters, which provide the AI computing power.

According to NetApp, the AFX systems scale linearly up to 128 nodes. This enables support for exabytes of data and high bandwidth. Organizations can optionally deploy DX50 control machines for metadata indexing. NetApp emphasizes support for multi-tenant usage and integration between on-premises and cloud environments.

AIDE

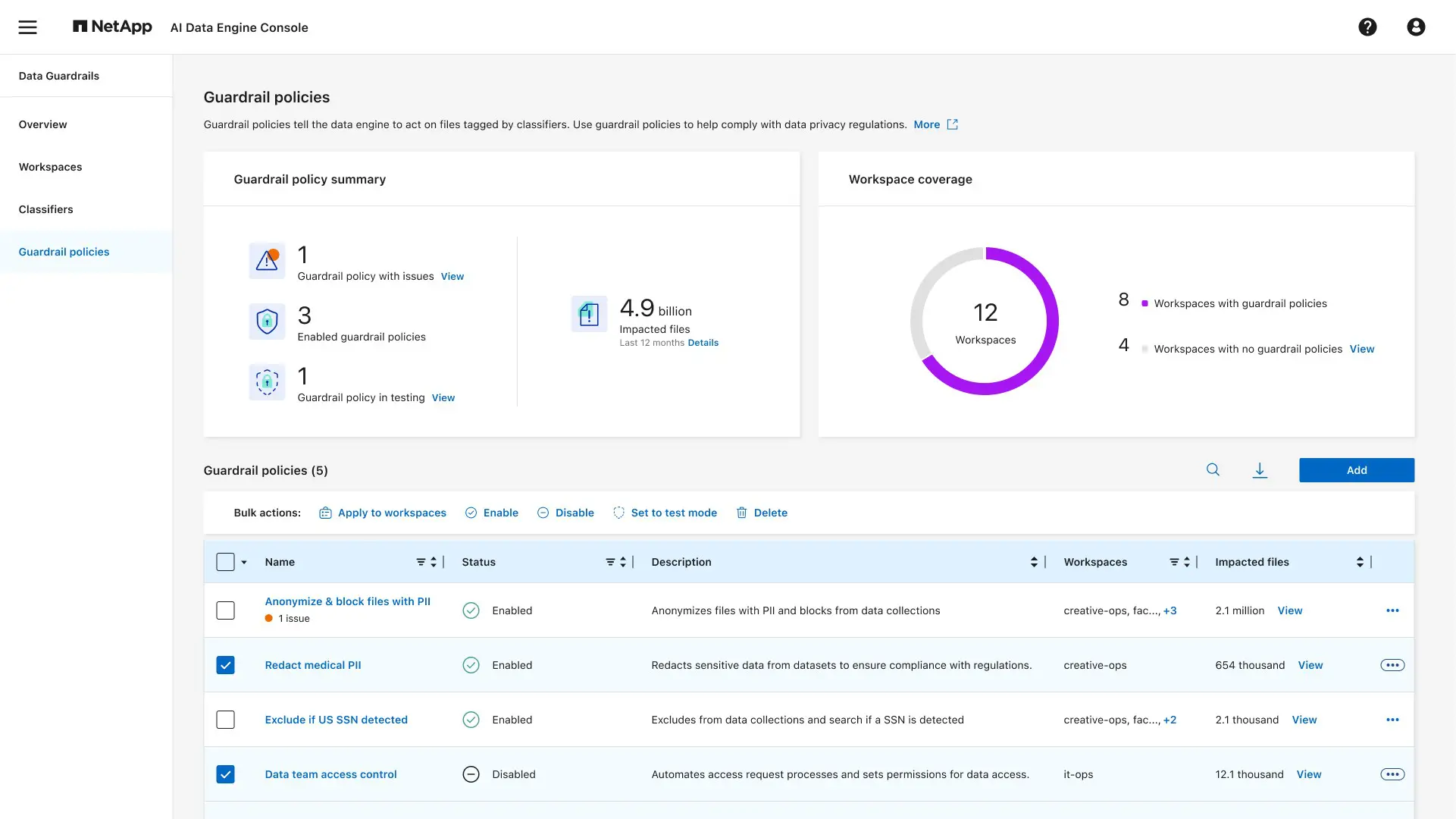

The NetApp AI Data Engine (AIDE) is the second pillar of the enterprise AI data platform. AIDE is an extension of Ontap and is integrated with the reference architecture of the Nvidia AI Data Platform.

This solution manages the complete AI data flow, from ingestion and data preparation to delivery of data to AI applications, both on-premises and in the cloud. AIDE automatically detects data changes and synchronizes data across different environments. It supports vectorization, semantic search, and data management.
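To make the idea of change detection combined with vectorization and search more concrete, here is a minimal sketch in Python. It is not NetApp's API: the `ToyIndex` class and its methods are hypothetical names, and the word-count vectors are a crude stand-in for the embedding model a real pipeline would use. The sketch only illustrates the principle that unchanged data does not need to be re-processed.

```python
import hashlib
import math
from collections import Counter


def fingerprint(text: str) -> str:
    """Content hash used to notice that a document has changed."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def vectorize(text: str) -> Counter:
    """Toy bag-of-words vector (assumption: stands in for a real embedding)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyIndex:
    """Hypothetical in-memory index; illustrative only."""

    def __init__(self):
        self.hashes = {}   # doc_id -> last seen content hash
        self.vectors = {}  # doc_id -> vector

    def ingest(self, doc_id: str, text: str) -> bool:
        """Re-vectorize only if the content changed; return True if it did."""
        h = fingerprint(text)
        if self.hashes.get(doc_id) == h:
            return False
        self.hashes[doc_id] = h
        self.vectors[doc_id] = vectorize(text)
        return True

    def search(self, query: str, top_k: int = 1) -> list:
        """Return the ids of the top_k documents most similar to the query."""
        q = vectorize(query)
        ranked = sorted(
            self.vectors,
            key=lambda d: cosine(q, self.vectors[d]),
            reverse=True,
        )
        return ranked[:top_k]
```

Calling `ingest` twice with identical content returns `False` the second time, which is the point of the example: only changed data triggers new vectorization work.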

The AI Data Engine runs locally on AFX systems with the optional DX50 nodes. In the near future, NetApp also plans integration with Nvidia RTX Pro servers, including the variant with the RTX Pro 6000 Blackwell Server Edition GPU.

Azure Integration

In addition to the new systems and services, NetApp also introduces updates for Azure NetApp Files (ANF). A new REST API makes datasets on ANF directly accessible via object storage protocols. This enables existing data to be used in Azure OpenAI, Azure Synapse, and Azure Machine Learning without creating additional copies.

Finally, the global namespace within Azure has also been expanded. With FlexCache, customers can virtually merge data from different Ontap storage systems (on-premises or in the cloud). The data itself is only transferred when it is needed. Through SnapMirror, datasets and snapshots can be quickly moved for backup, disaster recovery, or workload distribution.
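The principle of transferring data only when needed can be illustrated with a small read-through cache in Python. This is a conceptual sketch, not FlexCache's implementation or interface: `OriginVolume` and `ReadThroughCache` are hypothetical names, and the sketch leaves out cache invalidation, eviction, and writes.

```python
class OriginVolume:
    """Hypothetical remote volume; counts how often data is actually fetched."""

    def __init__(self, files: dict):
        self.files = dict(files)
        self.reads = 0

    def read(self, path: str) -> bytes:
        self.reads += 1
        return self.files[path]


class ReadThroughCache:
    """Presents the origin's data locally, fetching each file on first access."""

    def __init__(self, origin: OriginVolume):
        self.origin = origin
        self.local = {}  # path -> cached content

    def read(self, path: str) -> bytes:
        if path not in self.local:
            # Cache miss: transfer the data from the origin only now.
            self.local[path] = self.origin.read(path)
        return self.local[path]


origin = OriginVolume({"/datasets/train.bin": b"example-bytes"})
cache = ReadThroughCache(origin)
cache.read("/datasets/train.bin")  # first read goes to the origin
cache.read("/datasets/train.bin")  # second read is served locally
```

After both reads, `origin.reads` is 1: nothing was copied when the cache was created, and the file crossed the link only once.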

With this launch, NetApp aims to position itself as a specialist in high-performance data solutions for enterprise-level AI. With the AFX systems, AIDE, and the integration with Nvidia, NetApp offers a way to connect exabytes of (local) data to Nvidia’s accelerated AI hardware. NetApp strives to be comprehensive, while keeping cloud data integration in focus.