With the latest release of Cloudera Data Services, organizations can deploy generative AI in their own data centers without leaving the Cloudera ecosystem.

Cloudera introduces Private AI on-premises with its latest release of Cloudera Data Services. This enables companies to deploy generative AI within their own data center, behind the firewall.

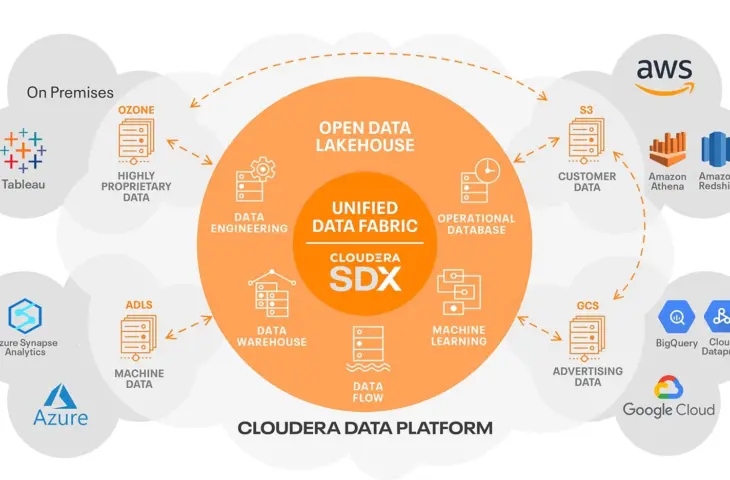

According to Cloudera, this gives organizations more control over security, data integrity, and intellectual property. The new services run on GPU-accelerated infrastructure and use cloud-native technology, without requiring data to move to the public cloud. By offering these capabilities on customers' own infrastructure, Cloudera also aims to keep companies from being tempted to leave its ecosystem.

From Cloud to On-Prem

The release includes Cloudera AI Inference Service and Cloudera AI Studios, both of which were previously available only in the cloud. The AI Inference Service is built with Nvidia technology and helps organizations deploy and manage AI models in production. AI Studios focuses on building GenAI applications from low-code templates, so that less technical teams can get started.

The tools run in the customer’s data center, where the data already resides. This reduces data-protection risks and makes it possible to develop sensitive AI applications without external dependencies. Scalability is preserved because the cloud-native architecture can now be deployed locally.

With this release, Cloudera aims to meet the growing demand for AI in a controlled, local environment and to establish itself as a major player in that market.