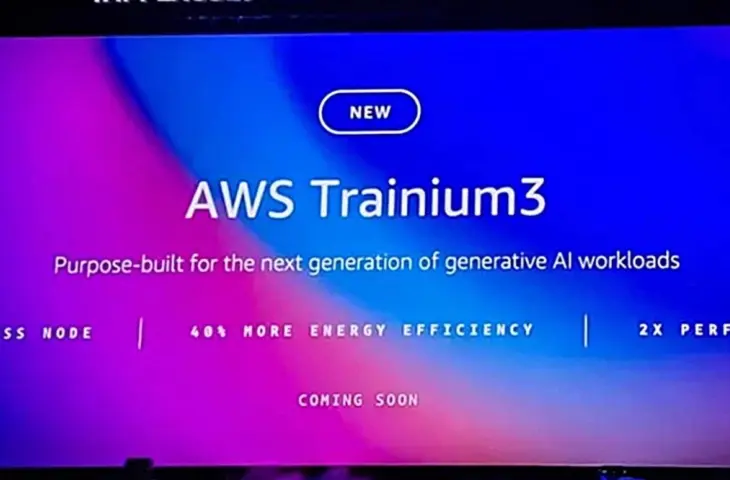

AWS launches Trainium3: chips that cost less and can handle larger workloads.

At re:Invent, AWS launched its new Trainium3 chip, further escalating its competition with Nvidia. The company is making its Trainium3 UltraServers, which combine up to 144 chips, available immediately. According to AWS, they deliver 4.4 times more computing power and nearly four times the memory bandwidth of the previous generation. CEO Matt Garman called the servers “our most advanced ever, with 4.4x more compute and 3.9 times more memory bandwidth.”

More power, less cost

AWS claims that its tests show cost savings of up to 50 percent compared with GPU-based training. Companies like Anthropic will be among the first to use the new hardware. The infrastructure behind Trainium3 scales through EC2 UltraClusters 3.0, which connect thousands of UltraServers. According to Garman, this enables tasks “that were simply not feasible before,” such as training multimodal models on trillion-token datasets.

Trainium4 already in development

Meanwhile, Nvidia remains the market leader with a 90 percent share, although analysts predict that this share will decline in the coming years. AWS is already working on Trainium4, which is expected to offer six times more computing power and to support Nvidia’s NVLink Fusion. Garman stated that Trainium chips are increasingly used within AWS itself: “The majority of inference in Amazon Bedrock already runs on Trainium.”