AI company Anthropic is making significant changes to its privacy policy. Data will now be used for training unless you opt out.

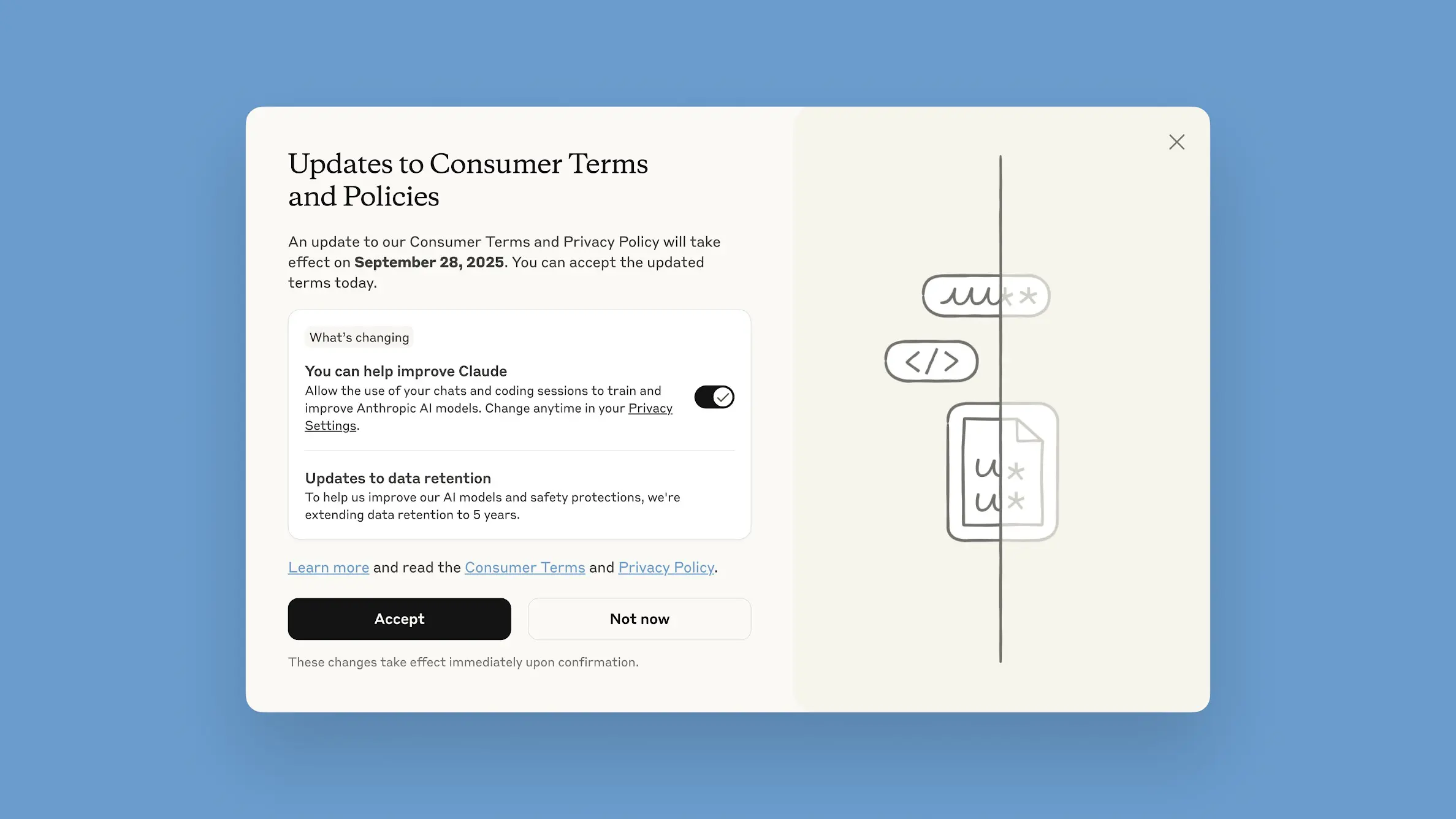

Users of Claude must explicitly confirm before September 28 whether their conversations may be used to train AI models. If they decline, their data remains untouched. Those who take no action automatically consent.

Conversation Data Stored for Five Years

Until now, conversations from both free and paying Claude users (such as Claude Pro and Claude Max) were not used for training. From the end of September, that policy changes: conversations, including code prompts, may be used to improve future AI systems. Data from users who don’t opt out will be stored for five years. That’s much longer than the previous thirty days.

New account holders will immediately be asked whether their conversations may be used for training. Existing users will only see a pop-up titled “Updates to our terms of use” with a prominent “Agree” button. The toggle that consents to data sharing is on by default and, according to TechCrunch, is displayed smaller and less conspicuously, which can lead to confusion. Many people don’t read the ‘fine print’ before agreeing to something.

Competitive Pressure and Lawsuits

Anthropic says users contribute to “safer and better AI” if they share their conversations. The need for training data likely plays a major role. AI companies like Anthropic, OpenAI, and Google compete fiercely and need enormous amounts of realistic input. Meanwhile, the number of lawsuits is growing: The New York Times, for example, sued OpenAI over copyright, a case that has also raised questions about data retention.

The choice lies with the user: tacitly consent or explicitly refuse. But making a truly well-informed choice is becoming increasingly difficult in this rapidly evolving sector.
