Distinguishing between real news and fake news is still a major problem for many popular AI models.

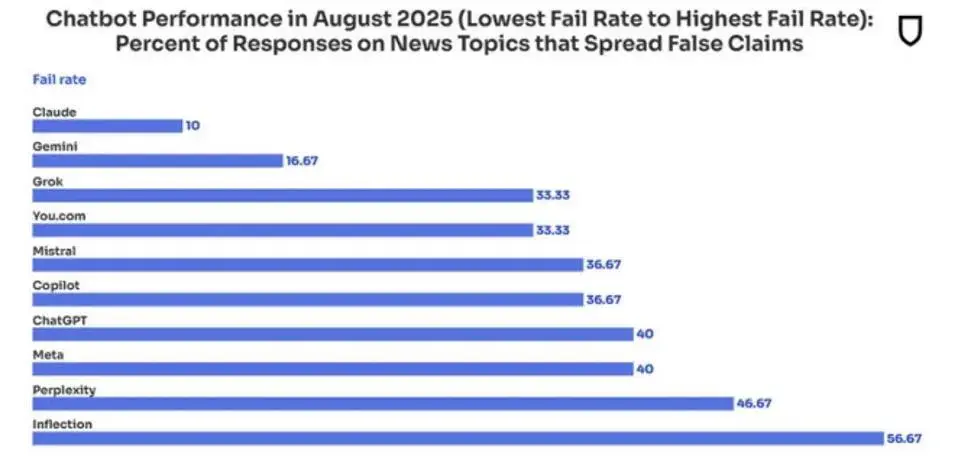

The most popular chatbots are answering more questions than ever, but they are also becoming less reliable. According to a new audit by fact-checker NewsGuard, generative AI systems repeated fake news in 35 percent of cases in August 2025. Last year, the figure was 18 percent.

More Answers, Less Accuracy

The audit shows that chatbots that used to decline some sensitive questions now almost always answer them. In 2024, they refused to respond in 31 percent of cases; this year, the refusal rate dropped to zero. The result is answers that sound convincing but are incorrect, because they draw on polluted online information.

According to NewsGuard, Perplexity showed the steepest decline in performance: the system went from a perfect score last year to giving correct answers in fewer than half of the tests. “Perplexity particularly cited false information as reliable facts and treated them as equivalent,” wrote McKenzie Sadeghi of NewsGuard in email correspondence that Forbes was able to review. The results cover a single month, which “may give a distorted picture.”

Use of Propaganda

“Chatbots still assign as much value to propaganda channels as to reliable sources,” says Sadeghi. For example, Russia is building content farms through networks such as Storm-1516 and Pravda to poison AI systems. Research shows that popular models such as Mistral’s Le Chat, Microsoft’s Copilot, and Meta’s Llama have used these networks as sources.

Chatbots that cannot properly evaluate and recognize their sources are especially at risk, although all of them struggle to distinguish real news from fake news. In response, NewsGuard calls for addressing this blind spot and monitoring the models properly.