RAG, short for Retrieval Augmented Generation, is almost inseparably linked to the use of generative AI in the workplace. What is RAG, and why is it such an essential component of a useful AI implementation?

A generative AI assistant without retrieval augmented generation (RAG) is like an enthusiastic digital intern with a people-pleasing attitude. Ask that assistant something, and it will do its utmost to provide an answer, even when it doesn’t know the answer.

Generative AI combined with RAG is like that same diligent intern, but this time armed with an encyclopedia filled with relevant knowledge. Ask a question that the intern’s own knowledge can’t answer, and the intern will consult the encyclopedia to find the real answer.

The first case is what we call a hallucination in generative AI: you get an answer that sounds correct, but isn’t. In the second scenario, the AI assistant has used RAG to arrive at a real answer based on the right information.

Why is RAG necessary?

Generative AI is built on Large Language Models (LLMs). LLMs are models such as GPT-4.5 from OpenAI, or Llama 3.3 from Meta. They are developed primarily by American technology giants and trained on enormous AI supercomputers. An LLM learns from training data. By feeding a neural network immense amounts of example data, connections are formed between digital neurons, allowing the network to eventually respond correctly to new data.

Show such a neural network thousands of photos of cats and dogs, and after a while, it can recognize the animals itself in new images. You can also think bigger like OpenAI and use half the internet’s articles, forum posts, digital books, blogs, and more as training data. Train a network with data at scale, and you get a model that makes ChatGPT possible.

LLMs are trained using such immense amounts of general and historical data. Based on that data, they are able to formulate answers to questions that sound very realistic. However, once a model like GPT-4.5 is finalized, the training is over. Think of a student who graduates and starts working. The lessons are over, so information that appears afterwards is no longer part of what the student learned for the diploma.

The student starts working with the knowledge gained at graduation. In the same way, the LLM gets to work with the knowledge it has acquired during training. Using a trained neural network is called inference. In the inference phase, the LLM is not aware of data created after finalization. Consequently, an LLM cannot answer questions about current events on its own.

- An LLM is trained on a multitude of general data and has no knowledge of company-specific information or data that was not part of the training process.

Training with your own data

OpenAI, Meta, Microsoft, and other parties hopefully do not have access to your specific company data. Consequently, an LLM is not trained on that data. If you ask for a description of product X or information about customer Y, the LLM will at best say it doesn’t have that knowledge, or at worst, hallucinate a good-sounding answer.

To deploy an LLM in a business context, the model must have knowledge of all important data in your company. Think of the product catalog, customers, financial results, and more. You can fine-tune the model for this purpose.

Fine-tuning is like additional training for the intern on the job itself. In this case, you further train the model on your own data. However, this again requires expensive AI computing power and specialist expertise. It takes a long time and costs a lot of money. And again, you have to finalize the model at some point. Two days later, you might launch a new product or secure a new customer, and you have to start over.

Fine-tuning is relevant to give generative AI models background knowledge about your company, but it doesn’t solve the core of the problem. You still can’t ask a question with the guarantee of getting a correct and up-to-date answer.

- Training models on your data or fine-tuning is a complex and expensive process, and it doesn’t solve the problem: new and changed data that weren’t part of the training are unknown to the LLM.

Retrieval Augmented Generation

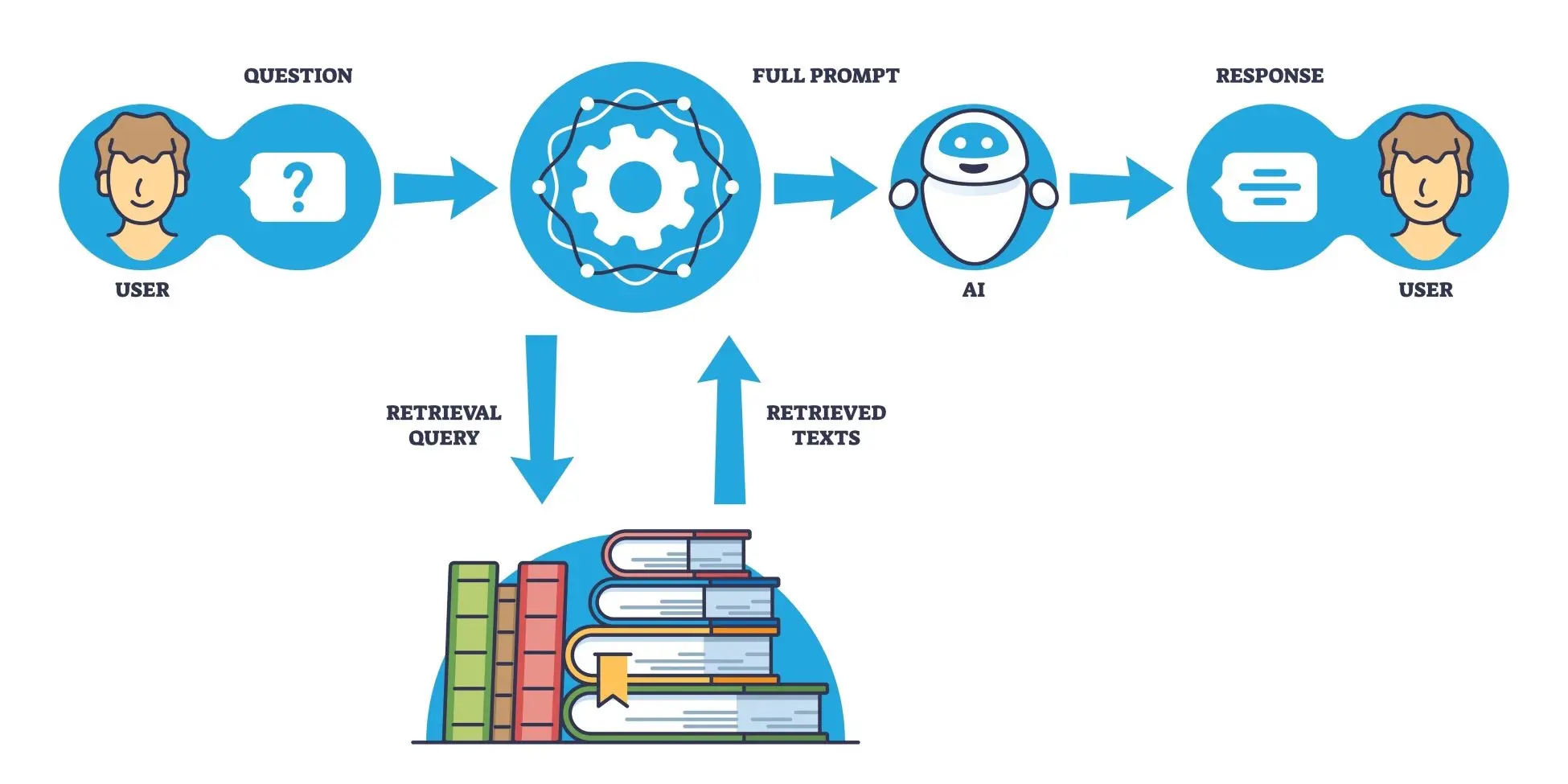

RAG takes a different approach. With RAG, it isn’t the LLM that is extended, but the question you ask. If you want to know something about your organization’s products, you simply ask the question: ‘What’s the difference between product X and Y?’

Before that question goes to the LLM, RAG springs into action. A separate retrieval step detects from the prompt that your question is about products. It then attaches the relevant parts of your company’s product catalog and price list to your question. Only then is the question passed on to the LLM.

The LLM now looks for the answer in the data that was attached to the question, rather than relying on what it remembers from training. The AI assistant’s IQ is the product of general training, but the answer to your specific question comes from the provided data.
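
The flow described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular product works: the `SOURCES` data, the keyword-based `select_sources` routing, and the prompt wording are all made-up assumptions, and the final call to an actual LLM is left out.

```python
import re

# Hypothetical in-memory "company data"; real systems would query live sources.
SOURCES = {
    "products": [
        "Product X: 10-inch tablet, 64 GB storage, list price 299 EUR.",
        "Product Y: 12-inch tablet, 128 GB storage, list price 449 EUR.",
    ],
    "customers": [
        "Customer Y: onboarded in 2023, uses Product X.",
    ],
}

# Hypothetical routing rules: which words point to which data source.
KEYWORDS = {
    "products": {"product", "price", "catalog"},
    "customers": {"customer", "client"},
}


def select_sources(question: str) -> list[str]:
    """Pick the sources whose keywords occur in the question."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    return [name for name, keys in KEYWORDS.items() if words & keys]


def build_prompt(question: str) -> str:
    """Attach the selected data to the question before it goes to the LLM."""
    context = [s for name in select_sources(question) for s in SOURCES[name]]
    lines = ["Answer using only the context below.", "", "Context:"]
    lines += [f"- {snippet}" for snippet in context]
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


prompt = build_prompt("What's the difference between product X and Y?")
print(prompt)  # this augmented prompt is what the LLM would receive
```

The model itself is untouched here. Only the text it receives changes, which is the point of the approach.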

These data sources aren’t static. They are reattached with each prompt. You can link databases, but also wikis full of text, emails, invoices, you name it. Your current company data is always linked to the question, giving the LLM constant access to correct and recent information.
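
To make the point about non-static sources concrete, here is a small sketch in Python. The `catalog` dictionary and the `augment` function are hypothetical stand-ins for a real company system and a real retrieval step. The only thing the example shows is that the data is read at question time.

```python
# Hypothetical live data source; imagine a database or wiki behind it.
catalog = {"Product X": "299 EUR"}


def augment(question: str) -> str:
    """Read the source at question time, so the context is always current."""
    facts = [f"{name} costs {price}." for name, price in sorted(catalog.items())]
    return "Context: " + " ".join(facts) + "\nQuestion: " + question


before = augment("Which products do we sell?")

# A new product launches. No retraining or fine-tuning is involved.
catalog["Product Z"] = "199 EUR"

after = augment("Which products do we sell?")
print(after)
```

The second prompt already contains the new product, even though the model behind it has not changed.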

It might take you a while to read a 2,000-page product catalog, link it to price lists and their evolution, and search for the customers involved. For an LLM, this is a matter of seconds. With RAG, you enrich the question with information. The LLM absorbs it and formulates an answer. The answer is generated after the prompt has been augmented with data retrieved from company systems, hence the name.

- With RAG, the LLM’s capabilities come from training, but the knowledge comes from relevant data from current sources, which is cleverly added to a prompt.

Practical

RAG doesn’t require expensive LLM training. In fact, a basic RAG setup can be implemented with relatively little code. AI solution providers can take care of that. It’s up to you to get your data in order. Like all AI applications, RAG only works well if the source data is of high quality.

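When the RAG system has access to your company data, it converts that data into numerical representations (embeddings) and stores them in a vector database. This is a continuous process, so new and changed data keep flowing in. When you then present a prompt, the RAG system uses the vector database to find the data most relevant to it and attaches that data to the prompt.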
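
As a rough illustration of that lookup, the Python sketch below builds a toy index. It is heavily simplified: the `embed` function just counts words, whereas real systems use a learned embedding model and a dedicated vector database. `ToyVectorIndex` and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorIndex:
    """Stand-in for a vector database: stores vectors, returns nearest texts."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda it: cosine(q, it[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


index = ToyVectorIndex()
for doc in [
    "Product X is a 10-inch tablet with 64 GB of storage.",
    "Invoice 1001 was paid by the customer in March.",
    "The holiday schedule for the office is on the wiki.",
]:
    index.add(doc)

top = index.search("How much storage does product X have?", k=1)
print(top)
```

Only the best-matching text is handed to the LLM along with the question. The rest of the data stays where it is.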

Linking the data isn’t an intensive process. You don’t need crazy HPC systems for it and it doesn’t cost a fortune. You link the knowledge you have to the AI brain you’ve brought in, and immediately enjoy the results.

It is important, however, that your data and the AI solution are easily connected. For example, it can help to store data in the cloud when you’re using a cloud AI solution, or to bring inference to local servers when your data is on-premises.

- RAG is easy to implement and provides tailored AI answers without incurring large training costs.

Read and Learn

Let’s look back at our analogy of the intern from the beginning. The RAG system is like an assistant sitting next to the intern, knowing roughly where all the important data is located. When you ask a question, the assistant whispers in the intern’s ear which databases, wikis, and other systems are relevant to that question. The intern is the same as before, but the answer is suddenly unique and valuable.

How a RAG system works exactly depends on the implementation. Developers are constantly looking for new ways to add data to prompts more accurately, quickly, and in a more targeted manner. The essence remains the same: with RAG, you can deploy a basic LLM to generate accurate and personalized answers based on your own data.

RAG makes it possible to talk to your data in natural language. The LLM’s training provides its capabilities; your data provides the actual knowledge. This is what makes an LLM combined with RAG so powerful.

Providers

Retrieval augmented generation is part of the offering of many AI vendors. AI specialist Nvidia, for example, allows developers to implement RAG via NeMo Retriever, which is part of the company’s AI stack.

Snowflake, which collects, secures, and makes customer data accessible in a cloud platform, has also implemented RAG in its AI solutions. There, users can connect their data to an LLM of their choice via Cortex AI. Snowflake, for its part, focuses on the RAG aspect, in order to link the right data to a prompt as efficiently as possible.

Cloudera also deserves mention, with its RAG Studio. This allows users to build chatbots that are connected to their current data via RAG.

RAG also plays a role in local AI. Think of Lenovo’s AI assistant AI Now, HP’s AI Companion, and Nvidia Chat with RTX. AMD also allows you to build a chatbot that runs exclusively on your computer. These solutions allow you to ask questions to a locally running LLM, which looks at data and files in folders on your PC for answers. This is also a form of (less targeted) RAG.