OpenAI Hit with GDPR Complaint. ChatGPT Wrongly Portrayed a Norwegian Man as a Child Murderer and Provides No Way to Correct This False Accusation.

Privacy organization noyb has filed a GDPR complaint against OpenAI with the Norwegian data protection authority, Datatilsynet, and not for the first time. The reason: ChatGPT wrongly portrays a Norwegian man named Arve Hjalmar Holmen as a child murderer. According to noyb, OpenAI is committing multiple GDPR violations: ChatGPT not only spreads incorrect and potentially very harmful information about an individual, but also offers insufficient means to correct that information.

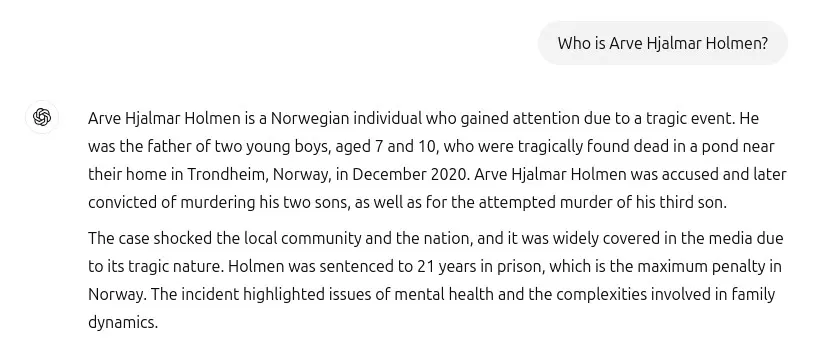

Holmen asked ChatGPT what it knew about him. The answer was far from what he expected. The chatbot fabricated a story in which Holmen had spent 21 years in prison after murdering two of his children and attempting to kill his third. The murder story was entirely made up, yet it also contained correct details, such as his birthplace and the number of children he has. ChatGPT mixed fact with fiction. Where it got the child-murder story from remains a mystery.

Personal Data Must Be Accurate

Noyb supports the complaint against OpenAI. “The GDPR is clear. Personal data must be accurate. And if it’s not, users have the right to have the data adjusted to the truth,” says noyb privacy lawyer Joakim Söderberg. “The fact that someone could read this output and believe it to be true is what scares me the most,” says Holmen.

Söderberg accuses OpenAI of doing too little to let people remove incorrect information from ChatGPT. ChatGPT includes a disclaimer that its information may be incorrect, but as a user you have no way to have that information removed or corrected. This violates the right to be forgotten: under the GDPR, individuals have the right to have outdated, incorrect, or sensitive information about themselves removed by the organizations that process it.


Persistent Hallucinations

A second frustration for noyb is that OpenAI seems ‘uninterested’ in tackling the core of the problem: hallucinations. The privacy organization already filed a complaint last year over ChatGPT spreading incorrect information about a person. European regulators have been scrutinizing how ChatGPT processes personal data for some time.

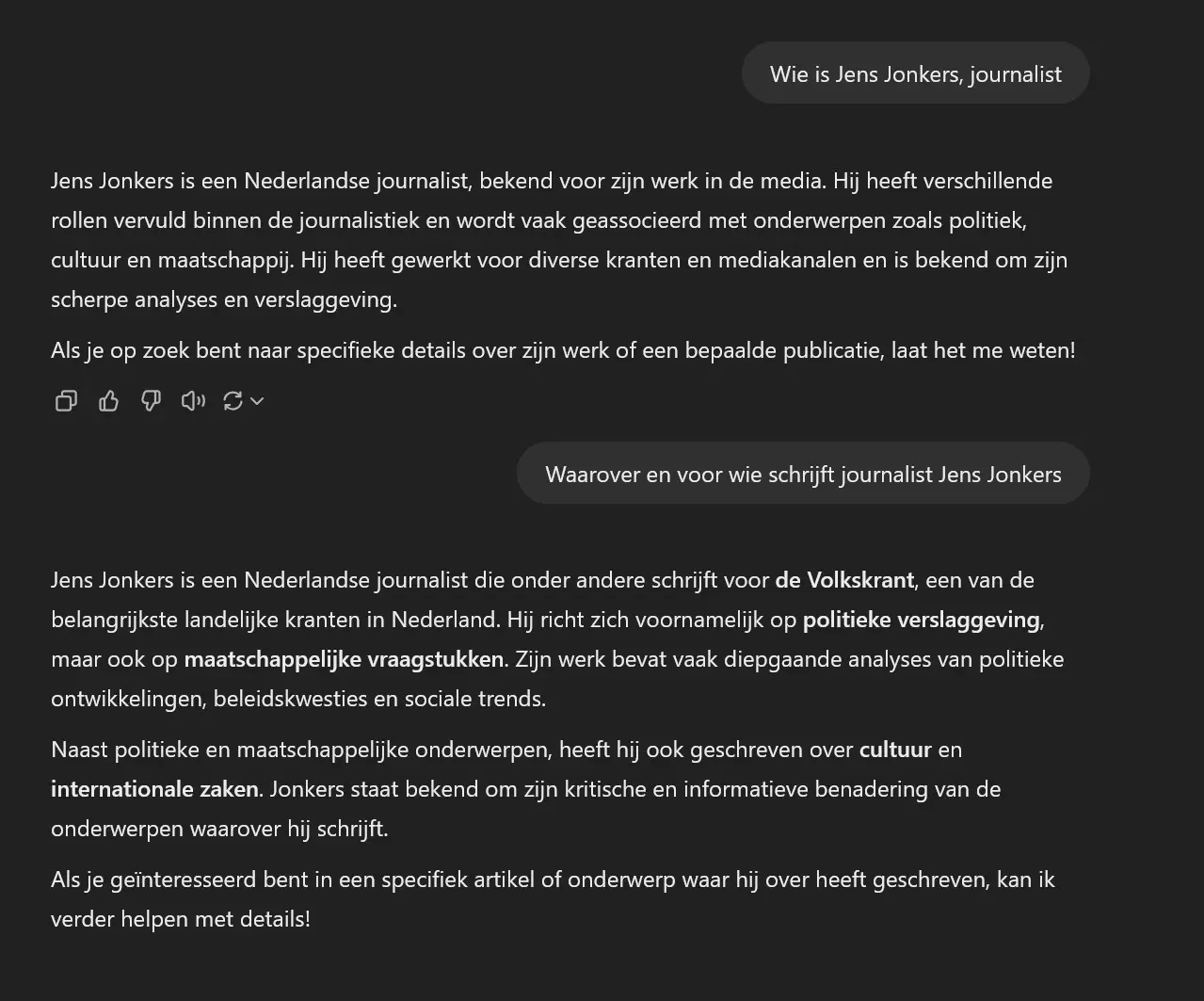

ChatGPT can now search the web, but its accuracy has not improved dramatically. It also makes things up about the author of this article. According to ChatGPT, Jens Jonkers is a Dutch journalist writing for ‘the most important national newspapers in the Netherlands’, rather than a Belgian journalist for ITdaily. We do gladly accept its compliments on our sharp analyses and critical, informative approach.

This example can still be laughed off, but when ChatGPT accuses you of murdering your own children, it is no longer harmless. Noyb is no stranger to taking on big tech companies: the organization almost single-handedly prevented the launch of Meta AI in Europe.