Japanese company Kioxia has developed NAND memory with a capacity of 5 TB and a transfer speed of 64 GB/s. This flash memory approaches the capabilities of HBM.

Kioxia has developed a prototype flash memory module with a capacity of five terabytes and a bandwidth of 64 GB/s. The module is designed to support applications requiring large amounts of data and fast processing, such as edge computing and artificial intelligence.

Middle Ground

The development is part of the Post-5G Information and Communication Systems Infrastructure Enhancement R&D Project, a research program funded by Japan’s New Energy and Industrial Technology Development Organization (NEDO). The prototype aims to address the limitations of conventional DRAM modules, which force a trade-off between capacity, speed, and cost.

Kioxia’s NAND memory aims to combine the large capacity of a traditional SSD with the high speed of DRAM and specialized memory such as HBM.

Complex RAID-0

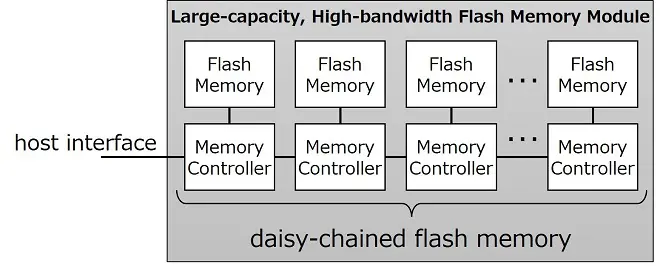

Kioxia achieves this by daisy-chaining the flash modules, connecting their memory controllers in series. In fast flash storage, the single central controller that serves all flash modules is typically the bottleneck; Kioxia sidesteps it by giving each module its own controller.

This approach makes it possible to increase capacity without sacrificing bandwidth. Additionally, the company developed transceiver technology that supports transfers of up to 128 gigabits per second. It also introduced techniques to improve flash memory performance, such as prefetching to reduce read latency and signal optimization to accelerate the memory interface.
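How prefetching cuts effective read latency can be illustrated with a small read-ahead cache model: on every miss it also fetches the next few blocks, so later sequential reads are served without touching flash. This is a generic read-ahead sketch, not Kioxia’s prefetch algorithm; the class name `ReadAheadCache`, the 64-block capacity, and the 8-block read-ahead window are hypothetical choices for illustration.

```python
# Hypothetical read-ahead cache model, NOT Kioxia's prefetch algorithm.
# Cache capacity and read-ahead window are illustrative assumptions.
import random
from collections import OrderedDict


class ReadAheadCache:
    """LRU block cache that prefetches the next `window` blocks on every miss."""

    def __init__(self, capacity: int = 64, window: int = 8) -> None:
        self.capacity = capacity     # assumed cache size, in blocks
        self.window = window         # assumed read-ahead depth, in blocks
        self.blocks = OrderedDict()  # block id -> None, least recently used first
        self.hits = 0
        self.misses = 0

    def _insert(self, block: int) -> None:
        self.blocks[block] = None
        self.blocks.move_to_end(block)
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # evict the least recently used block

    def read(self, block: int) -> None:
        if block in self.blocks:
            self.hits += 1           # served from cache: no flash access needed
            self.blocks.move_to_end(block)
            return
        self.misses += 1             # slow path: pay the full flash read latency
        self._insert(block)
        for nxt in range(block + 1, block + 1 + self.window):
            self._insert(nxt)        # speculatively fetch the following blocks


def hit_rate(addresses, **kwargs) -> float:
    """Fraction of reads served from the cache for a given access pattern."""
    cache = ReadAheadCache(**kwargs)
    for a in addresses:
        cache.read(a)
    return cache.hits / (cache.hits + cache.misses)


sequential = range(1000)
rng = random.Random(42)
scattered = [rng.randrange(1_000_000) for _ in range(1000)]
print(f"sequential: {hit_rate(sequential):.3f}")  # sequential: 0.888
print(f"random:     {hit_rate(scattered):.3f}")   # close to zero
```

With an 8-block window, one miss pays for the next eight sequential reads, while scattered reads almost never land on a prefetched block. That asymmetry is why read-ahead helps streaming access far more than random access.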

In effect, Kioxia arranges the flash much like a RAID-0 configuration, spreading data across many modules to form a large and very fast array. Because this happens at the level of the flash modules themselves, the manufacturer can deliver a single component that reaches 64 GB/s with a capacity of 5 TB.
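The RAID-0 comparison can be made concrete with a short striping sketch: consecutive fixed-size chunks of the address space rotate across modules, so a long sequential transfer keeps every module busy at once, and adding modules raises bandwidth until the host link becomes the ceiling. This is a generic RAID-0 model, not Kioxia’s daisy-chain design; the `StripedArray` name, the 4 KiB stripe, the module counts, the 8 GB/s per module, and the use of 64 GB/s as the host ceiling are assumptions chosen for illustration.

```python
# Generic RAID-0 striping model, not Kioxia's daisy-chain protocol.
# Module count, stripe size, and per-module bandwidth are illustrative assumptions.
from dataclasses import dataclass


@dataclass(frozen=True)
class StripedArray:
    modules: int       # flash modules, each assumed to have its own controller
    stripe_bytes: int  # bytes placed on one module before moving to the next

    def locate(self, offset: int) -> tuple[int, int]:
        """Map a logical byte offset to (module index, byte offset within that module)."""
        stripe = offset // self.stripe_bytes
        module = stripe % self.modules
        local = (stripe // self.modules) * self.stripe_bytes + offset % self.stripe_bytes
        return module, local

    def aggregate_bandwidth(self, per_module_gb_s: float, host_link_gb_s: float) -> float:
        """Ideal streaming bandwidth: all modules work in parallel, capped by the host link."""
        return min(self.modules * per_module_gb_s, host_link_gb_s)


array = StripedArray(modules=4, stripe_bytes=4096)
for off in (0, 4096, 8192, 16384, 20580):
    print(off, "->", array.locate(off))
# 0 -> (0, 0), 4096 -> (1, 0), 8192 -> (2, 0), 16384 -> (0, 4096), 20580 -> (1, 4196)

for n in (4, 8, 16):
    print(n, "modules:", StripedArray(n, 4096).aggregate_bandwidth(8.0, 64.0), "GB/s")
# 4 modules: 32.0, 8 modules: 64.0, 16 modules: 64.0 (host link is the ceiling)
```

The `min` in `aggregate_bandwidth` captures the point of the design: once each module has its own controller, the limit moves from a shared controller to the host interface.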

Energy-Efficient

The module uses PCIe 6.0 as its host interface and consumes less than 40 watts. According to Kioxia, the technology can be deployed in servers for mobile edge computing, which process data closer to the user, thereby reducing latency. This is relevant for applications in IoT, big data analysis, and advanced AI models, including generative AI.
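The two headline figures allow a quick efficiency estimate. The sketch assumes, which the article does not state explicitly, that the 64 GB/s bandwidth is reached within the sub-40 W power envelope; because 40 W is an upper bound, the result is a best-case bound rather than a measurement.

```python
# Back-of-the-envelope efficiency from the article's figures.
# Assumption: the 64 GB/s is sustained within the stated power envelope.
bandwidth_gb_s = 64.0  # stated bandwidth, GB/s
max_power_w = 40.0     # stated upper bound on power draw, W

# 40 W is a ceiling, so these are bounds, not measured values.
min_gb_s_per_watt = bandwidth_gb_s / max_power_w  # 1 GB/s per W equals 1 GB per joule
max_nj_per_byte = max_power_w / bandwidth_gb_s    # joules per GB equals nanojoules per byte

print(f"at least {min_gb_s_per_watt:.1f} GB/s per watt")   # at least 1.6 GB/s per watt
print(f"at most {max_nj_per_byte:.3f} nJ per byte moved")  # at most 0.625 nJ per byte moved
```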

Somewhat ironically, latency is precisely the Achilles’ heel of this system, at least compared to HBM. HBM, like other DRAM, has access latencies in the tens of nanoseconds, while Kioxia’s solution remains closer to a microsecond. Prefetching and caching hide part of that delay, but mainly for sequential workloads, where the next addresses are predictable.
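Why latency still matters at high bandwidth can be shown with Little’s law: the amount of data that must be in flight equals throughput multiplied by latency. The sketch below uses the article’s rough one-microsecond figure for the flash module and an assumed 100 ns for a DRAM-class device, with an assumed 4 KiB request size; the function names are hypothetical.

```python
# Little's law applied to a memory device: bytes in flight = bandwidth x latency.
# Latencies and request size are illustrative assumptions.
import math


def bytes_in_flight(bandwidth_bytes_s: float, latency_s: float) -> float:
    """Data that must be outstanding at all times to keep the device busy."""
    return bandwidth_bytes_s * latency_s


def requests_needed(bandwidth_bytes_s: float, latency_s: float, request_bytes: int) -> int:
    """Concurrent requests of a given size needed to sustain the bandwidth."""
    return math.ceil(bytes_in_flight(bandwidth_bytes_s, latency_s) / request_bytes)


BANDWIDTH = 64e9  # 64 GB/s (decimal gigabytes), as stated for the module
REQUEST = 4096    # assumed 4 KiB request size

for label, latency in (("DRAM-class, ~100 ns (assumed)", 100e-9),
                       ("flash module, ~1 us (article)", 1e-6)):
    print(f"{label}: {requests_needed(BANDWIDTH, latency, REQUEST)} requests in flight")
# DRAM-class, ~100 ns (assumed): 2 requests in flight
# flash module, ~1 us (article): 16 requests in flight
```

At about a microsecond, roughly 16 requests must be outstanding to sustain 64 GB/s. A prefetcher can generate that concurrency on its own for sequential reads, but with random access the application has to supply it, which is why the latency gap bites hardest there.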

In other words, Kioxia is showcasing a solution that sits between traditional flash storage on one side and DRAM and HBM on the other. The module could be relevant for workloads such as AI and analytics, where capacity and throughput take precedence over raw latency.

Commercially Viable?

In theory, the prototype has its place in the hardware ecosystem. In practice, Kioxia is not the first to try to position a product between flash and DRAM. Intel and Micron attempted something similar with 3D XPoint memory, which Intel sold as Optane, but it never caught on commercially, and Intel announced in 2022 that it would wind down the Optane business. Kioxia is now exploring ways to bring its technology to market.