Nvidia unveils Rubin CPX, a new GPU purpose-built for inference tasks that require a long context and the processing of many tokens.

Nvidia is introducing Rubin CPX, which the company describes as a new class of GPU. The chip is tailored to inference tasks in which a large context matters.

The context is the amount of input, measured in tokens, that a model has to take into account during an inference task (generating an AI response). Complex workloads such as video generation require a large context and rapid processing of enormous numbers of tokens (the chunks of data an AI model works with). According to Nvidia, generating one hour of video can require up to one million tokens. With Rubin CPX, Nvidia is launching a chip specialized for inference with such massive context requirements.
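To put Nvidia's figure in perspective, the short Python sketch below converts one million tokens per hour of video into a per-second rate. It assumes, purely for illustration, that the token count scales linearly with video length, which real video models need not do. The helper names `tokens_per_second` and `tokens_for_clip` are hypothetical and not part of any Nvidia tool.

```python
# Nvidia's figure: up to roughly one million tokens for one hour of video.
TOKENS_PER_HOUR_OF_VIDEO = 1_000_000
SECONDS_PER_HOUR = 3600


def tokens_per_second(tokens_per_hour: int = TOKENS_PER_HOUR_OF_VIDEO) -> float:
    """Average number of tokens per second of video."""
    return tokens_per_hour / SECONDS_PER_HOUR


def tokens_for_clip(seconds: float) -> float:
    """Rough token estimate for a clip.

    Assumption (hypothetical): tokens grow linearly with clip duration.
    """
    return seconds * tokens_per_second()


if __name__ == "__main__":
    print(round(tokens_per_second(), 1))  # 277.8
    print(round(tokens_for_clip(60)))     # 16667
```

Even a one-minute clip would then occupy more than 16,000 tokens of context, which illustrates why such workloads put pressure on context length.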

The chip delivers up to 30 petaFlops of AI compute, although Nvidia measures this in its own 4-bit NVFP4 data format, which makes the figure somewhat arbitrary and hard to compare with other chips. The chip carries 128 GB of GDDR7 memory. Nvidia chose GDDR7 over HBM because it is cheaper and, according to the company, sufficient for this kind of inference.

Total Platform

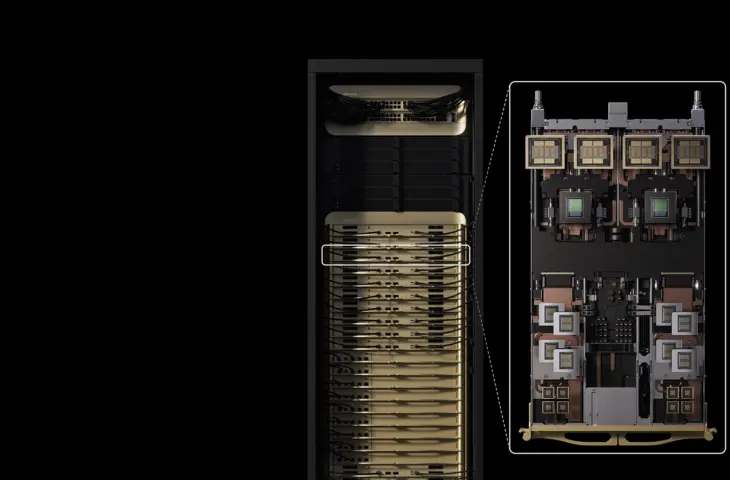

Nvidia combines the Rubin CPX chip with Vera CPUs and Rubin GPUs in the Nvidia Vera Rubin NVL144 CPX platform. This integrated system delivers eight exaFlops of AI compute, again measured in Nvidia's own NVFP4 format. Rubin CPX works with Nvidia's existing AI software stack.

Nvidia is targeting large customers (and high revenue) with this solution. The company claims that every 100 million dollars invested in Rubin CPX systems could generate five billion dollars in token revenue, a fiftyfold return. This somewhat arbitrary calculation shows the scale Nvidia has in mind. Nvidia expects Rubin CPX to become available at the end of 2026.