Hot water is the solution for keeping servers cool, according to Lenovo. With some nifty innovations, the manufacturer wants to make water cooling widely accessible. HPC and AI are the initial targets, but over time everyone can benefit. The key: a solution that fits within existing data centers.

Air cooling of servers is reaching its limits. So says Scott Tease, VP of WW High-Performance Computing and AI Intelligence at Lenovo, in an interview with ITdaily during Tech World in Bellevue. “All the energy you pump into a server leaves it in the form of heat,” he explains.

“If your server demands 10 kilowatts of power, then you need to remove 10 kilowatts of heat,” he continues. “That’s typically done with air conditioning, but if you want to get rid of 10 kW, you need about 4 kW of cooling.”

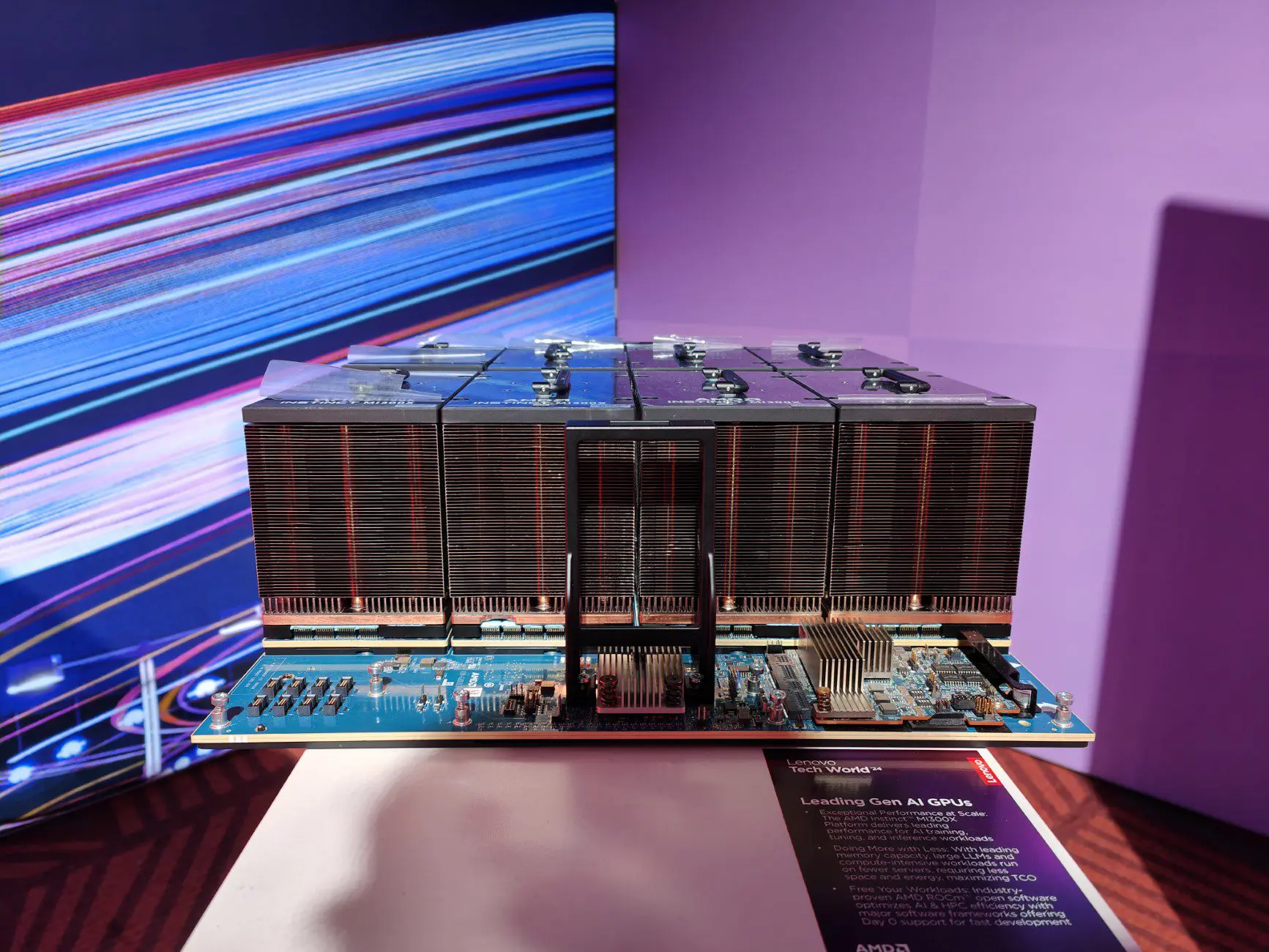

It’s not efficient, but it works. “Even HPC servers with 25 kW consumption can be cooled with air,” Tease admits. Lenovo offers such solutions itself and is exhibiting them at Tech World. The air-cooled HPC servers are admittedly huge and heavy, largely because of their immense radiators.

Limit of air cooling

As servers become even more powerful, the option of air cooling will disappear. “You just can’t cool 100 kW with air,” Tease said. The solution: water cooling.

Lenovo is not alone in realizing this. “Dell and HP also build powerful solutions,” Tease acknowledges. “But their racks are huge. They are 2.5 meters deep, don’t fit through a door or in an elevator, and customers have to reinforce the floor of their data center to put them there.”

Accessibility issue

So the trick is to cool powerful servers in a more accessible way. “Our water-cooled servers are also a little wider and taller than a standard rack server,” Tease said. In other words, they don’t fit in a standard rack either.

“We put that challenge to the engineering team,” he continues. “How can we still get those oversized servers into a standard 19-inch rack?” At Tech World, Lenovo presents the solution to that issue.

The manufacturer is introducing the N1380 chassis: a large enclosure in which customers slot HPC servers vertically, and which itself does fit into a classic 19-inch rack. The chassis also has onboard PDUs (power distribution units). One rack can house three N1380 chassis. Lenovo thus opens the door to racks with capacities in excess of 100 kW, without the need for drastic modifications to the data center.

Hot water

The Neptune liquid cooling system itself works with uncooled water at room temperature. “The water going into the server may be up to 45 °C,” Tease says. The water leaving the server is about 55 °C. Tease: “That way, we don’t have to put energy into cooling the water itself.”
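To get a feel for what those temperatures imply, the following is a minimal sketch of the standard heat-balance estimate (heat load = mass flow × specific heat × temperature rise). The water properties are textbook approximations and the function name is hypothetical; none of this comes from Lenovo, and it assumes all of the server's heat ends up in the water loop.

```python
# Rough estimate of the water flow needed to carry away a given heat load.
# Assumptions (not from the article): approximate water properties near 50 °C,
# and all heat is transferred to the water loop.

SPECIFIC_HEAT_J_PER_KG_K = 4180.0  # approximate specific heat of liquid water
DENSITY_KG_PER_L = 0.99            # approximate density of water around 50 °C


def required_flow_l_per_min(heat_load_kw: float, inlet_c: float, outlet_c: float) -> float:
    """Return the volumetric flow (litres per minute) needed to absorb heat_load_kw."""
    delta_t = outlet_c - inlet_c
    if delta_t <= 0:
        raise ValueError("outlet temperature must exceed inlet temperature")
    # Heat balance: P = m_dot * c * delta_t, solved for the mass flow m_dot.
    mass_flow_kg_s = heat_load_kw * 1000.0 / (SPECIFIC_HEAT_J_PER_KG_K * delta_t)
    return mass_flow_kg_s / DENSITY_KG_PER_L * 60.0


if __name__ == "__main__":
    # Heat loads mentioned in the article, with 45 °C in and 55 °C out.
    for load_kw in (10, 25, 100):
        flow = required_flow_l_per_min(load_kw, inlet_c=45.0, outlet_c=55.0)
        print(f"{load_kw:>4} kW -> about {flow:.0f} L/min")
```

Under these assumptions, a 100 kW rack with a 10 °C temperature rise needs on the order of 145 litres of water per minute. A smaller temperature rise would require proportionally more flow.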

With those temperature tolerances, the sixth-generation Neptune liquid cooling system is in principle capable of keeping most data centers in Europe and the U.S. cool, without special treatment of the water beforehand.

Lenovo can offer that fairly unique technology thanks to years of its own research. That research actually began back in the IBM era, and is based on knowledge from the mainframe industry. “When you look at the first solutions designed around 2010, you can see that Neptune is still based on that today,” Tease said. “Large tubes, minimal connectors, low flow rate and low pressure: the solution still follows the same principles.”

Lenovo develops its water-cooled servers entirely in-house, starting from the motherboard. That, according to Tease, is the key to efficiency, which in turn enables hot water cooling.

Pool heating

Obviously, users will have to connect the liquid cooling, but that is not a major challenge, according to Tease. Lenovo already has considerable experience integrating water cooling into customers’ data centers originally built for air cooling.

Tease hopes that in the future, customers will use the waste heat, for example, to heat buildings. This is not a theoretical idea: at its factory in Hungary, Lenovo itself already heats meeting rooms with hot water from its Neptune test servers.

“You can simply connect Neptune’s water lines to pipes, and pump the water to somewhere else,” he explains. “Think of a university, for example, where the hot water from the data center can heat up the pool water via a heat exchanger. In this way, the hot water is no longer a waste product, but can be recycled for energy-saving purposes.”

Low PUE

Integrating water cooling already brings significant savings by itself. “Everything starts with the fans,” says Tease. “Fans are responsible for 10 to 15 percent of the power consumption of air-cooled servers. It takes a lot of energy to move air.”

Neptune-cooled servers no longer need fans, and because the water cooling removes all residual heat from the servers, the data center doesn’t need air conditioning either.

“That’s how we save a tremendous amount of power,” Tease explains. “An air-cooled data center typically has a power usage effectiveness (PUE) of 1.4 or 1.6, meaning that forty to sixty percent of the power goes not to the servers but to cooling. With Neptune, the PUE drops below 1.1. A PUE of 1.07 is easily achievable.”

In other words, water cooling helps save up to 40 percent in power compared to the same workloads supported by air cooling.
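The arithmetic behind these figures can be sketched as follows. PUE is conventionally defined as total facility power divided by IT equipment power, so the "forty to sixty percent" reads most naturally as overhead relative to the IT load. The function names are hypothetical, and the fan calculation assumes the entire fan share disappears with water cooling and ignores pump power; both are simplifications, not Lenovo's figures.

```python
# Back-of-the-envelope PUE arithmetic using the figures quoted in the article.
# PUE (power usage effectiveness) = total facility power / IT equipment power.


def pue(it_power_kw: float, overhead_kw: float) -> float:
    """PUE for a given IT load and non-IT overhead such as cooling."""
    return (it_power_kw + overhead_kw) / it_power_kw


def overhead_share_of_total(pue_value: float) -> float:
    """Fraction of total facility power that does not reach the IT equipment."""
    return (pue_value - 1.0) / pue_value


def total_power_saving(pue_air: float, pue_water: float, fan_share: float = 0.0) -> float:
    """Fraction of total facility power saved for the same compute work.

    fan_share is the part of an air-cooled server's power drawn by its fans.
    Assumption: that share vanishes entirely with water cooling (pumps ignored).
    """
    air_total = pue_air                          # per 1 kW of air-cooled server power
    water_total = (1.0 - fan_share) * pue_water  # same work, fans removed
    return 1.0 - water_total / air_total


if __name__ == "__main__":
    print(f"10 kW of servers + 4 kW of cooling -> PUE {pue(10, 4):.2f}")
    for p in (1.4, 1.6, 1.07):
        print(f"PUE {p}: {overhead_share_of_total(p):.0%} of total power is overhead")
    print(f"PUE 1.6 -> 1.07, fans ignored: {total_power_saving(1.6, 1.07):.0%} saved")
    print(f"PUE 1.6 -> 1.07, 15% fan share: {total_power_saving(1.6, 1.07, 0.15):.0%} saved")
```

Under these assumptions, going from a PUE of 1.6 to 1.07 saves about a third of total power on its own, and a little over 40 percent once a 15 percent fan share is removed as well. That is consistent with the "up to 40 percent" figure.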

“We initially developed the technology for HPC customers,” Tease explains. “But AI is now going to be the biggest beneficiary. AI has really accelerated the interest in water cooling.”

Also for enterprise?

The numbers Tease is touting also seem interesting outside of AI and HPC workloads. More conventional servers can also benefit from water cooling. A lower PUE means a smaller environmental impact and a lower operating cost.

“There is indeed more interest than ever,” agrees Tease, “but it is still not as strong outside HPC and AI. With sustainability and ESG goals, there are more motivating factors than ever to embrace water cooling, but we don’t see a big boost in the enterprise segment yet. Not yet, because it will come eventually.”

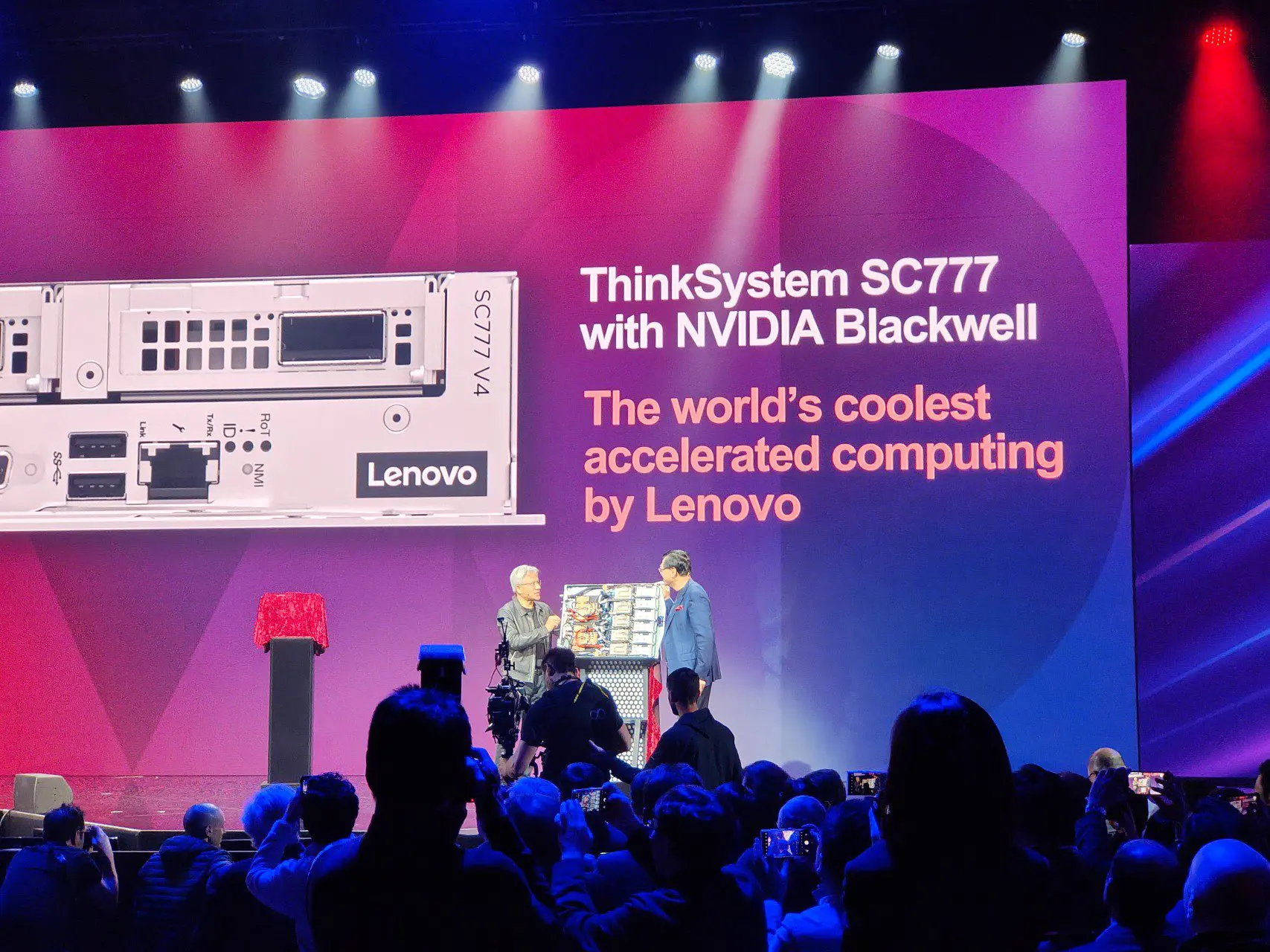

Sexy server

At Tech World, Lenovo is showing off the brand-new SC777: an AI server cooled by Neptune water cooling and equipped with two Nvidia GB200 Blackwell superchips. CEO Yuanqing Yang presented the server on stage together with Nvidia boss Jensen Huang. “Isn’t this wonderful,” Huang said at the introduction. “For an engineer, this device is sexy.”

“That SC777 consumes fifteen times more power than the first nodes we cooled with Neptune about fifteen years ago,” Tease adds. “That seems like a lot, but each SC777 is about a thousand times more performant than the servers back then. The devices consume more, but the performance per watt has improved exponentially.”
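Taking Tease's round numbers at face value, the implied gain in performance per watt can be worked out in a few lines. This is an illustrative sketch only: the function names are hypothetical, and the annualized figure assumes steady compound growth over fifteen years, which the article does not claim.

```python
# Implied efficiency gain from the round figures quoted above:
# about 15x the power, about 1000x the performance, over about 15 years.


def perf_per_watt_gain(performance_ratio: float, power_ratio: float) -> float:
    """How many times more work is done per watt."""
    return performance_ratio / power_ratio


def annual_growth_factor(total_gain: float, years: float) -> float:
    """Equivalent yearly multiplier, assuming steady compound growth."""
    return total_gain ** (1.0 / years)


if __name__ == "__main__":
    gain = perf_per_watt_gain(performance_ratio=1000.0, power_ratio=15.0)
    yearly = annual_growth_factor(gain, years=15)
    print(f"Performance per watt: about {gain:.0f}x better")
    print(f"Equivalent compound improvement: about {(yearly - 1.0):.0%} per year")
```

With these inputs, performance per watt comes out roughly 67 times better, equivalent to an improvement of about a third every year if the growth were spread evenly.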

Relatively accessible

Neptune and the N1380 chassis make the SC777 relatively accessible. Thanks to Neptune, customers can fill standard racks with the AI servers in a conventional data center. Of course, that’s not to say that everyone can suddenly install Blackwell AI servers, quite apart from the price tag.

Tease: “For a long time, the limiting factor in rolling out sufficient computing power was budget and number of computing cores. Today, it’s actually the available power.” Lenovo cannot inject more power into the grid. Accessible Neptune solutions, however, should ensure that as much as possible of the power available to an organization goes to the IT hardware and not to cooling.

Secret sauce

Tease believes that’s why Neptune gives Lenovo an advantage. “Other manufacturers could probably copy our solution, if they really wanted to,” he suspects. “But that doesn’t give them our experience in converting classic data centers to water cooling. That experience is our secret sauce.”