HPE launches a rack-scale AI architecture built around AMD’s new Helios design and equipped with a scale-up network switch based on Broadcom’s Tomahawk 6 chip. The solution targets cloud providers looking to deploy AI training and inference at large scale.

HPE introduces a new rack-scale AI architecture that combines AMD’s Helios platform with an Ethernet-based switch developed by HPE Juniper Networking. The solution offers cloud service providers a fully integrated rack designed for workloads such as training models with trillions of parameters and running large-scale inference.

Rack-scale AI aimed at growing demand for compute

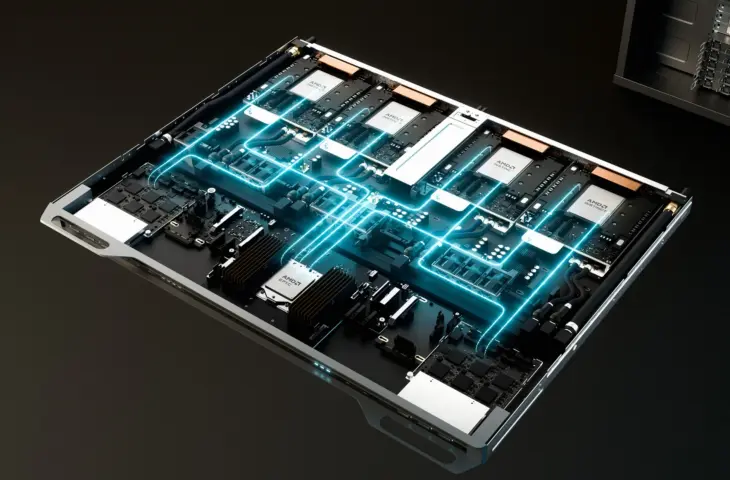

Just ahead of HPE Discover Barcelona 2025, HPE announces a rack that links 72 AMD Instinct MI455X GPUs to deliver 260 TB/s of scale-up bandwidth and 2.9 exaflops of FP4 compute. With it, HPE targets organizations looking to scale their AI clusters further while retaining flexibility and adhering to open standards. Configurations support up to 31 TB of HBM4 memory and 1.4 PB/s of memory bandwidth.
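The headline numbers are rack-level totals. One rough way to put them in per-accelerator terms is to divide each by the 72 GPUs in the rack. The short Python sketch below does exactly that, assuming an even split across GPUs and decimal units (1 TB = 1,000 GB, 1 PB/s = 1,000 TB/s). The dictionary keys and the `per_gpu` helper are illustrative names, not part of any HPE or AMD tool.

```python
# Rack-level totals as quoted for the HPE Helios rack, expressed in
# smaller decimal units so the per-GPU results are easy to read.
# Assumption: every total is split evenly across all GPUs in the rack;
# this is back-of-the-envelope division, not a vendor per-GPU spec.
GPUS_PER_RACK = 72

rack_totals = {
    "FP4 compute (PFLOPS)": 2_900,        # 2.9 exaflops
    "HBM4 capacity (GB)": 31_000,         # 31 TB
    "HBM bandwidth (TB/s)": 1_400,        # 1.4 PB/s
    "scale-up bandwidth (TB/s)": 260,     # aggregate scale-up bandwidth
}


def per_gpu(totals: dict[str, float], gpus: int) -> dict[str, float]:
    """Divide each rack-level total evenly across the GPUs in the rack."""
    return {name: value / gpus for name, value in totals.items()}


if __name__ == "__main__":
    for name, value in per_gpu(rack_totals, GPUS_PER_RACK).items():
        print(f"{name:28s} ~{value:,.2f} per GPU")
```

The division works out to roughly 40 PFLOPS of FP4 compute, about 430 GB of HBM4, about 19.4 TB/s of memory bandwidth and about 3.6 TB/s of scale-up bandwidth per GPU. Because the rack totals are themselves rounded, these derived figures are approximate.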

The rack architecture follows the Open Rack Wide specification of the Open Compute Project (OCP). This standard introduces a double-wide rack with liquid cooling and a layout designed to simplify maintenance. The emphasis on open standards is consistent with HPE’s stated strategy of avoiding vendor lock-in.

Ethernet as a scale-up network for AI training

A notable element of the solution is the use of Ethernet for scale-up network traffic within the rack. The new switch platform, built on Broadcom’s Tomahawk 6 silicon, uses Ultra Accelerator Link over Ethernet (UALoE).

By using Ethernet as the connection layer, HPE aims to offer an alternative to proprietary interconnect technologies. This fits HPE’s broader AI networking strategy, in which scale-up (GPU to GPU within a rack), scale-out (between racks in a cluster) and scale-across (between data centers) network layers complement each other.

The new switch platform integrates with existing HPE networking components, such as the QFX5250 scale-out switch and PTX routers, which serve as building blocks for connecting AI clusters within and between data centers.


Integration of liquid cooling and HPE services

The system comes with support from HPE’s service teams, who have experience with liquid-cooled infrastructure and exascale installations. HPE has repeatedly emphasized that liquid cooling is essential for new generations of AI hardware, an approach reflected in both the QFX5250 switch and the Helios rack itself. The integrated cooling is intended to reduce energy consumption and keep densely packed GPU clusters operating reliably.

The rack also supports AMD’s ROCm software stack and AMD Pensando technology for network acceleration and security. With this combination, HPE aims to give companies an open architecture suited to rapid iteration on AI models and to large-scale deployments.

The AMD Helios AI Rack will be available worldwide in 2026.